what was the first wrong turn in theoretical physics [?] ...No, SO(10) is not really simpler and prettier, and such thinking was indeed a wrong turn in 1973 that led theoretical physicists into a 40-year dead-end.

There would be "more moderate" groups that would identify the grand unification as the first wrong turn, or supersymmetric field theories as the first wrong turn, or bosonic string theory, or superstring theory, or non-perturbative string theory, or M-theory, or the flux vacua, or something else.

I've met members of every single one of these groups. ...

Some critics of the evolutionary biology say that zebras and horses may have a common ancestor but zebras and llamas can't. Does it make any sense? ...

The case of the critics of physics is completely analogous. If grand unification were the first wrong turn, how do you justify that the group SU(3)×SU(2)×U(1) is "allowed" to be studied in physics, while SO(10) is already blasphemous or "unscientific" (their word for "blasphemous")? It doesn't make the slightest sense. They're two groups and both of them admit models that are consistent with everything we know. SO(10) is really simpler and prettier ...

You justify the group SU(3)×SU(2)×U(1) because each of its factors is grounded in experimental observations. SU(3) is the 3-color-quark theory of strong interactions, SU(2) is the weak interaction (beta decay), and U(1) is electromagnetism. SO(10) adds many new particles and phenomena that have never been observed and have no experimental basis.

Supposedly SO(10) unifies the forces, but it doesn't. It doesn't reduce the number of coupling constants or experimentally-determined parameters. It doesn't make the theory or analysis any easier.

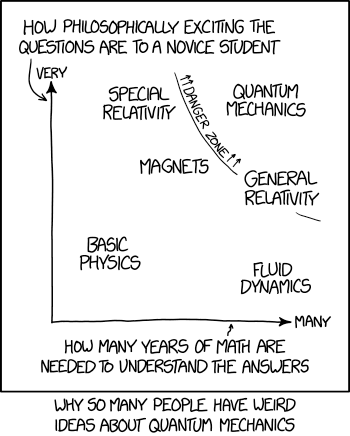

I have a theory that this wrong turn, and a bunch of subsequent ones, were rooted in misconceptions about big physics successes of the past, notably relativity and quantum mechanics.

Those theories were grounded in experiment, but there is a widespread belief that Einstein should get all the credit for relativity because he ignored the experiments and carried out the supposedly essential step of elevating principles to postulates. I wrote a book, How Einstein Ruined Physics, explaining the damage from this warped view of Einstein.

So physicists came to believe that all the glory in physics goes to those who do such sterile theorizing. Hence grand unified theories that do not actually unify or explain anything.