More and more, physics popularizers like Sean M. Carroll tell us that we have to accept Many-Worlds as the only intellectually respectable interpretation of quantum mechanics. Furthermore, they say, it is logically implied by Schroedinger's equation, and Occam's Razor requires accepting it. As a bonus, it is completely deterministic, so we have no free will.

This is so crazy, it deserves to be mocked.

Nicolas Gisin writes in a new paper:

Newton never pretended that his physics were complete. And so, the dictatorship of Determinism was tolerable to free men.

Then came quantum physics. At first, free men celebrated the revolution of intrinsic randomness in the material world. This was the end of the awful dictator Determinism, or so they thought. But this dictator had a son... or was it his grandson?

Determinism returned in the new guise of quantum physics without randomness: everything, absolutely everything, all alternatives, would equally happen, all on an equal footing. Real choices were no longer possible.

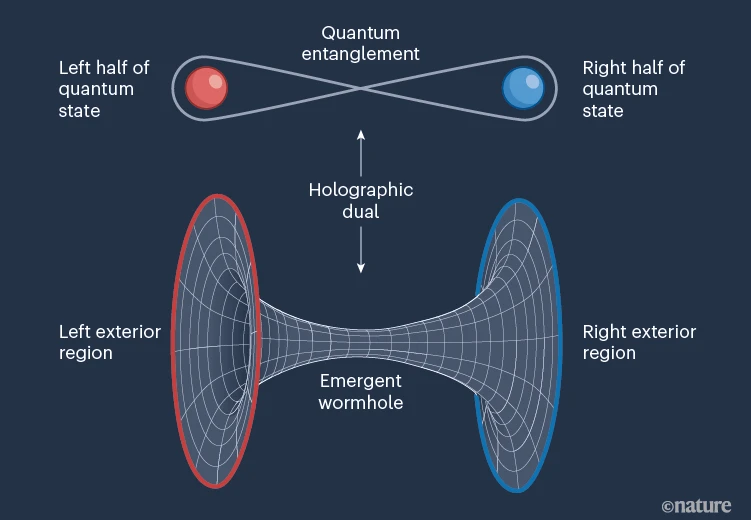

But the most terrible was still to come: universal entanglement. According to the new multiversal dictator, not only did the material world obey deterministic laws, but it was all one big monstrous piece, everything entangled with everything else. There was no room left for any pineal gland, no possible interface between physics and free-will. The sources of all forces, all fields, everything was part of the big Ψ, the wavefunction of the multiverse, as the dictator bade people call their new God.

...

It’s time to take a step back. I am a free being, I enjoy free-will. I know that much more than anything else. How then, could an equation, even a truly beautiful equation, tell me I’m wrong? I know that I am free much more intimately than I will ever know any equation. Hence, and despite the grandiloquent speeches, I know in my gut that the Schrodinger equation can’t be the full story; there must be something else. “But what?”, reply the dictator’s priests. Admittedly, I don’t know, but I know the multiverse hypothesis is wrong, simply because I know determinism is a sham [4–9].

He is right. You know it is bogus when Carroll tells us that Occam's Razor and the beauty of the Schroedinger equation require us to believe that zillions of new universes are being created every second. When you think you are making a decision, you are just creating new universes where everything possible happens.

You do not have to understand the mathematics of the Schroedinger equation to know that this is foolishness.

Here is a reply to Gisin.

According to compatibilism, it is perfectly possible that our will is compatible with a causally closed world. But this may seem to be a too simplistic semantic trick to avoid the problem, and there is more to be said.

But how can indeterminism allow free-will? How would it help if our decisions are not fully determined by our own present state, but by occasional randomness breaking into the causal chain?

Wouldn’t we be more free if we can determine our next decisions based on how we are now, rather than letting them at the mercy of randomness?

When the super-smart AI robots take over the world, I expect them to use sneaky philosophical arguments like this to convince people that the truest possible freedom is to become a slave to a deterministic algorithm.

But why being restricted to a unique choice would mean more freedom than making all possible choices in different worlds? A world in which we can choose only one thing and all the others are forbidden restricts our freedom. MWI allows us to follow Yogi Berra’s advice: “When you come to a fork in the road, take it.”

So true freedom is a child's imagination, where anything can happen.

Could it be true that in MWI the histories in which Shakespeare produced randomly both great and bad literature overwhelmingly dominate the multiverse? If MWI gives the same probabilities as standard QM, Shakespeare should create consistently great or consistently bad literature in most histories.

So how would entanglement limit creativity?

It is hard to believe physicists say this stuff seriously.

Here is another attempted rebuttal. I guess the Gisin paper touched a nerve.

Second, the concept of free will is vague and ill-defined – so it is a shaky basis to build a general argument against a given physical theory. Of course we all experience (and enjoy) the feeling of making our decisions spontaneously and autonomously, and it is comforting to know that this feeling is not in contradiction with our most fundamental understanding of the universe; but in order to understand exactly how physical laws allow for free will (or consciousness, or creativity – call it whatever you like), one needs a physical theory of it, which we currently do not have.

In other words, we all experience free will, but our physics cannot explain it, so we should just go with theories that make it impossible.

Another argument against unitary quantum theory is that its only available interpretation is the so-called “Many-world” interpretation ...

Unitary quantum theory is consistent; it provides a good explanation of all the experimental observations so far, and (unlike some of its stochastic variants) it is also compatible with properties of general relativity, such as locality and the equivalence principle.

Yes, unitary QM is just another name for Many-Worlds, and it has never been able to explain any experiments, or shown any advantage in compatibility with relativity.

In regular QM, you collect some data, make a wave function, compute a prediction, do an experiment, and get a definite outcome. Then you collapse the wave function to incorporate the new info.
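The "compute a prediction, get a definite outcome, collapse" steps of textbook QM can be sketched for a single measurement. This is a minimal sketch, assuming the state is given as a list of complex amplitudes in the measurement basis; the function names `born_probabilities` and `measure_and_collapse` are hypothetical, chosen only for illustration.

```python
import math
import random


def born_probabilities(amplitudes):
    """Born rule: outcome i has probability |a_i|^2, normalized over all outcomes."""
    weights = [abs(a) ** 2 for a in amplitudes]
    total = sum(weights)
    return [w / total for w in weights]


def measure_and_collapse(amplitudes, rng=random):
    """Sample one definite outcome, then collapse the state onto that outcome.

    Hypothetical helper: returns (outcome index, collapsed state vector).
    The collapsed state is the basis vector for the observed outcome
    (any overall phase is dropped).
    """
    probs = born_probabilities(amplitudes)
    outcome = rng.choices(range(len(amplitudes)), weights=probs, k=1)[0]
    collapsed = [0j] * len(amplitudes)
    collapsed[outcome] = 1 + 0j
    return outcome, collapsed


if __name__ == "__main__":
    # Prediction: a state with amplitudes sqrt(0.8) and sqrt(0.2).
    state = [math.sqrt(0.8), math.sqrt(0.2)]
    print(born_probabilities(state))

    # Experiment: each run gives one definite outcome, and the state collapses.
    rng = random.Random(0)
    outcome, new_state = measure_and_collapse(state, rng)
    print(outcome, new_state)
```

Repeating the experiment many times gives outcome 0 about 80% of the time. After a run, the collapsed state is a single basis vector, so measuring it again in the same basis repeats the same result.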

In a unitary theory, all the possible outcomes that did not happen must live on somehow. We do not see them, so they are supposed to be in parallel worlds. So the theory does not really predict anything, because it says all outcomes happen, most of them invisibly.

Saying that the unitary QM theory is consistent and explains experiments is just nonsense. The theory says anything can happen. It only explains experiments in the sense that whatever we see is one of the possibilities in a theory saying everything is a possibility. That's all. The theory cannot even say that some outcomes are more probable than others.

Here is a recent podcast from another free-will denier. He says most physicists believe that QM has inherent indeterminacies, but he subscribes to superdeterminism. He goes on to explain that Schroedinger's cat is either alive or dead, and that long-term weather prediction may be impossible due to chaos. Okay, but no free will? He returns to the subject at 1:33:20. His only reluctance about accepting full determinism is that he does not want to excuse Hitler from moral responsibility.