Tuesday, June 26, 2012

New book on existence

NOVELIST Martin Amis once said we are about five Einsteins away from explaining the universe's existence. "His estimate seemed about right to me," says Jim Holt at the beginning of his book Why Does the World Exist? "But I wondered," he continues, "could any of those Einsteins be around today? It was obviously not my place to aspire to be one of them. But if I could find one, or maybe two or three or even four of them, and then sort of arrange them in the right order... well, that would be an excellent quest." Thus begins his humorous yet deeply profound journey to solve the great mystery: why is there something rather than nothing? ...

Sounds as if he does not take the subject too seriously. I saw Larry Krauss on the Comedy channel Colbert Report claiming that there is no God and physics can explain a universe from nothing, but he got stumped on whether something could come from the nothing that is God.

For instance, Holt writes that a theory of everything, uniting relativity and quantum mechanics, would be the closest science can get to an explanation for existence. "But the final theory of physics would still leave a residue of mystery - why this force, why this law?" Holt writes. "It would not live up to the principle that every fact must have an explanation: the Principle of Sufficient Reason. On the face of it, the only theory that does obey this principle is the Theory of Nothingness. That is why it's surprising that the Theory of Nothingness turns out to be false, that there is a world of Something."

Saturday, June 23, 2012

Needle of mathematical consistency

…Gil and other modern skeptics need a theory of physics which is compatible with existing quantum mechanics, existing observations of noise processes, existing classical computers, and potential future reductions of noise to sub-threshold (but still constant) rates, all while ruling out large-scale quantum computers. Such a ropy camel-shaped theory would have difficulty in passing through the needle of mathematical consistency.

That is saying that it is hard to construct a mathematically consistent theory that excludes quantum computers. But it is also hard to construct a mathematically consistent theory that includes quantum computers.

My FQXi essay tries to explain the foolishness of this "needle of mathematical consistency" argument. There is a similar argument about Bell test experiments. They are unremarkable except that many physicists and philosophers find that they conflict with their prejudices about mathematical needles, whatever they are.

His comment is like saying that gravity is impossible because it is hard to see how quantum gravity would pass through the needle of mathematical consistency. People are always making weird consistency arguments about quantum mechanics. If you take those people seriously, then there is no reality. But you take them just seriously enough to believe in quantum computers? I say that quantum computers are impossible.

Thursday, June 21, 2012

The Socrates cave allegory

The idea was described by the ancient Greek philosopher Socrates in about 400 BC, and written by Plato as the Allegory of the Cave. People in the cave see shadows, and do not appreciate the 3-D nature of the objects causing the shadows. They are seeing a 2-D projection of 3-D objects.

A photograph is also a 2-D projection of a 3-D scene. A measurement with a meter stick is a 1-D projection. Other observations can also be viewed as projections of some more complex reality.
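To make the shadow idea concrete, here is a minimal Python sketch. The `shadow` helper and the two point sets are made-up names for illustration only, and the projection is assumed to be the simplest kind (drop the height coordinate). It shows two different 3-D objects casting the same 2-D shadow, so the shadow alone cannot tell them apart.

```python
def shadow(points):
    """Orthographic projection onto the floor: drop the z coordinate."""
    return sorted({(x, y) for x, y, z in points})

# Hypothetical objects: a flat unit square and the eight corners of a unit cube.
square = [(0, 0, 0), (1, 0, 0), (0, 1, 0), (1, 1, 0)]
cube = [(x, y, z) for x in (0, 1) for y in (0, 1) for z in (0, 1)]

# Different objects, identical shadows.
same_shadow = shadow(square) == shadow(cube)
print(same_shadow)
```

The observer in the cave only ever gets the return value of `shadow`, never the original list of points.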

Quantum mechanics is the first theory to truly take the cave allegory seriously. It has a theory for how observations correspond to projections, without ever trying to explain what is outside the cave. The theory concocts representations of reality, but is never sure about what the reality is.

Much of the confusion about quantum mechanics occurs when people try to ask about what the theory says about reality. It does not directly say anything about reality. It describes projections of that reality into subspaces, and predicts observations.

Tuesday, June 19, 2012

FQXi essay contest

I am trying to resist the temptation to badmouth my competition.

The first phase of the contest is to collect ratings at the above FQXi site. Some essays will get low ratings because the readers think that the author is wrong. But the whole idea of the essay contest is to produce essays that say everyone else is wrong about something. So if someone gets a very high rating, I will be suspicious that he did not write a sufficiently provocative essay. Of course I want a high rating anyway, as the higher-rated essay will get read more and taken more seriously. Maybe the ideal essay would leave the reader thinking, "Sounds convincing, but it cannot be right or someone would have said that before." Or maybe, "At first I thought the proposal was absurd, but now I think that it is obvious."

You can also download the essay here on my site, where the references are clickable links. You can also comment on it at the FQXi site.

Monday, June 18, 2012

Physical reality hypothesis

I propose what I call the physical reality hypothesis. It says that all physical systems, from a single electron up to the entire universe, have an objective physical reality, but no faithful mathematical model.

By "objective", I mean independent of any observer. Different observers might still see different things, if the act of observation interferes with the system. By "faithful", I mean an error-free representation of the physics.

This hypothesis is contrary to the Weak mathematical universe hypothesis.

Since 1926, Heisenberg and Bohr have based quantum mechanics on the idea that observations can be accurately modeled mathematically, but not the underlying physical reality. Von Neumann showed in 1932 that straightforward attempts to mathematize the physical reality with hidden variables fail. Bohm found an exception to von Neumann's proof, but only by abandoning causality. His work has been a dead end.

The Bell test experiments showed that electron and photon spin cannot be mathematized (with local hidden variables) independent of observation.

So this physical reality hypothesis is squarely within mainstream physics. It is very hard to make sense out of 20th century physics otherwise.

But nevertheless many people seem to implicitly assume that the hypothesis is false. For example, a quantum computer is characterized by:

In general, a quantum computer with n qubits can be in an arbitrary superposition of up to 2^n different states simultaneously (this compares to a normal computer that can only be in one of these 2^n states at any one time). A quantum computer operates by manipulating those qubits with a fixed sequence of quantum logic gates.

So believers in quantum computing seem to think that they can create arbitrary superpositions, that these mathematical superpositions will perfectly reflect reality, and that these states can be perfectly manipulated by the transformations corresponding to quantum gates. These superpositions and transformations must be accurate to many decimal places because of the way quantum algorithms work.
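To give a sense of scale for that claim, here is a small sketch of how many numbers a full mathematical description of n qubits requires, namely 2 to the power n complex amplitudes. The function names are hypothetical, and the 16 bytes per amplitude is my assumption (two 64-bit floats per complex number), not something taken from the quoted description.

```python
def basis_states(n_qubits):
    """Number of basis states, and so of complex amplitudes, for n qubits."""
    return 2 ** n_qubits

def state_vector_bytes(n_qubits, bytes_per_amplitude=16):
    """Memory to store every amplitude, assuming 16 bytes per complex number."""
    return basis_states(n_qubits) * bytes_per_amplitude

# Print the count and the storage estimate for a few sizes.
for n in (10, 30, 50):
    print(n, basis_states(n), state_vector_bytes(n))
```

Under this assumption, 30 qubits already need 16 GiB just to write the state down, and each extra qubit doubles it.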

The quantum computer scientists are always saying that their theory is a consequence of what we know about quantum mechanics. It seems to me that it is the opposite. The lesson of 20th-century quantum mechanics is that we do not have faithful representations of n-qubit states or any other complex state.

Sunday, June 17, 2012

Finding the Higgs mechanism and boson

The Higgs was discovered in the 70's when the standard model was put together; this is just the latest confirmation of the standard model. It once again disputes all the naysayers, who think the hierarchy problem is fake. No. The higgs is real. The standard model is real. Its properties, such as the hierarchy problem, are real. Nature has spoken, we have listened.

I agree with it. The Higgs mechanism is an essential part of the Standard Model of high-energy physics and has been quantitatively confirmed since the 1970s. The value of the mass was unknown, and there is always the possibility of some other explanation for the data, but the Higgs was discovered in the 1970s.

There is a separate Wikipedia article on the Higgs boson, where it is described as "a hypothetical elementary particle predicted by the Standard Model (SM) of particle physics." According to rumors at the above blog, the boson has been found to have a mass of 125 GeV, and it will be announced by the LHC as soon as all the data are double-checked.

There will be a fight for credit for the Higgs boson. Part of the problem is that Nobel prizes are not given for theoretical physics, so no prize was given for the Higgs mechanism. So some physicists will be claiming that they should be credited now for work that they did in the 1960s.

It did not really take 50 years to appreciate the value of that work. By 1970, it was clear that the Higgs mechanism gave an important class of models that were advantageous over all the known alternatives. By 1975, we were getting experimental confirmation of those models. The recent LHC experiments add the value of the Higgs mass, but the theoretical and experimental evidence for the SM with Higgs mechanism had been known for decades.

In sum, the Higgs mechanism was proposed by Anderson in 1962, adapted to the SM in the following decade, and experimentally confirmed in the 1970s. The Higgs boson was proposed by Higgs in 1964, and is just now being experimentally confirmed by the LHC (if the rumors are correct).

Friday, June 15, 2012

Following wrong relativity lessons

Quantum gravity physicist Carlo Rovelli writes an essay:

The prototype of this way of thinking, I think the example that makes it more clear, is Einstein's discovery of special relativity. On the one hand there was Newtonian mechanics, which was extremely successful with its empirical content. On the other hand there was Maxwell's theory, with its empirical content, which was extremely successful, too. But there was a contradiction between the two.

This is all nonsense, as Einstein was not the one to discover special relativity. I explain it in my book, How Einstein Ruined Physics.

If Einstein had gone to school to learn what science is, if he had read Kuhn, and the philosopher explaining what science is, if he was any one of my colleagues today who are looking for a solution of the big problem of physics today, what would he do?

He would say, okay, the empirical content is the strong part of the theory. The idea in classical mechanics that velocity is relative: forget about it. The Maxwell equations, forget about them. Because this is a volatile part of our knowledge. The theories themselves have to be changed, okay? What we keep solid is the data, and we modify the theory so that it makes sense coherently, and coherently with the data.

That's not at all what Einstein does. Einstein does the contrary. He takes the theories very seriously. He believes the theory. He says, look, classical mechanics is so successful that when it says that velocity is relative, we should take it seriously, and we should believe it. And the Maxwell equations are so successful that we should believe the Maxwell equations. He has so much trust in the theory itself, in the qualitative content of the theory, that qualitative content that Kuhn says changes all the time, that we learned not to take too seriously, and so much faith in this, confidence in that, that he's ready to do what? To force coherence between these two, the two theories, by challenging something completely different, which is something that is in our head, which is how we think about time.

Wednesday, June 13, 2012

Laplacian predictability

The last century saw dramatic challenges to the Laplacian predictability which had underpinned scientific research for around 300 years. Basic to this was Alan Turing’s 1936 discovery (along with Alonzo Church) of the existence of unsolvable problems.

He is referring to Laplace's demon. Did that silly idea really underpin scientific research for 300 years?

Laplace was wrong about predictability for many reasons, and most of them have nothing to do with Turing. We never had a strongly predictable theory of particles, and they would be chaotic even if we did.
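The chaos point can be illustrated with a standard toy system, the logistic map. It is not a model of particles; I am using it only as an assumed stand-in for a fully deterministic rule, and the function name, starting value, and step count are arbitrary choices. Two starting points that differ by one part in ten billion end up on unrelated trajectories.

```python
def logistic_orbit(x0, r=4.0, steps=100):
    """Iterate the deterministic rule x -> r * x * (1 - x)."""
    x = x0
    orbit = [x]
    for _ in range(steps):
        x = r * x * (1.0 - x)
        orbit.append(x)
    return orbit

# Two nearly identical initial conditions.
a = logistic_orbit(0.2)
b = logistic_orbit(0.2 + 1e-10)

# Distance between the two orbits at each step.
gap = [abs(p - q) for p, q in zip(a, b)]
print(gap[0], max(gap))
```

The rule has no randomness at all, yet any measurement error in the starting value eventually swamps the prediction.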

Tuesday, June 12, 2012

Physicists against philosophy

Lawrence Krauss ... responded to the review by calling the philosopher who wrote it “moronic” and arguing that philosophy, unlike physics, makes no progress and is rather boring, if not totally useless. ...

No, philosophers are not nice to science. Most of them subscribe to a theory of Kuhnian paradigm shifts. This theory says that science does not make progress towards truth, and new theories are not measurably superior to old ones. It is a direct attack on what science is all about.

This is hardly the first occasion on which physicists have made disobliging comments about philosophy. Last year at a Google “Zeitgeist conference” in England, Stephen Hawking declared that philosophy was “dead.” Another great physicist, the Nobel laureate Steven Weinberg, has written that he finds philosophy “murky and inconsequential” and of no value to him as a working scientist. And Richard Feynman, in his famous lectures on physics, complained that “philosophers are always with us, struggling in the periphery to try to tell us something, but they never really understand the subtleties and depths of the problem.”

Why do physicists have to be so churlish toward philosophy? Philosophers, on the whole, have been much nicer about science.

That being said, Krauss wrote a pretentious and crappy book. If he writes about philosophy, then he should be willing to defend himself against criticisms from philosophers.

Mr. Weinberg has attacked philosophical doctrines like “positivism” (which says that science should concern itself only with things that can actually be observed). But positivism happens to be a mantle in which Mr. Hawking proudly wraps himself; he has declared that he is “a positivist who believes that physical theories are just mathematical models we construct, and that it is meaningless to ask if they correspond to reality.”

Hawking said that in his 1996 book with Penrose, Nature of Space and Time. He repudiated that in his 2010 book with Mlodinow, The Grand Design, where he advocates M-theory and Model-dependent realism. No positivist would ever speak in favor of M-theory.

It is hard to take psychology seriously as long as S. Freud is its biggest hero, hard to take anthropology seriously as long as M. Mead is its biggest hero, and hard to take philosophy of science seriously as long as T. Kuhn is its biggest hero.

Today the world of physics is in many ways conceptually unsettled. Will physicists ever find an interpretation of quantum mechanics that makes sense? Is “quantum entanglement” logically consistent with special relativity? Is string theory empirically meaningful? How are time and entropy related? Can the constants of physics be explained by appeal to an unobservable “multiverse”? Philosophers have in recent decades produced sophisticated and illuminating work on all these questions. It would be a pity if physicists were to ignore it.

Monday, June 11, 2012

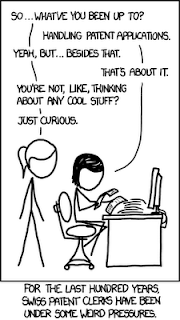

Pressures on Swiss patent clerks

This is today's xkcd comic.

A. Einstein is the only man to get a Nobel Prize in the sciences for work done in his spare time, while working some other day job. That is part of his mystique. Ever since, he has been used in all sorts of arguments about human potential, sponsored research, and paradigm shifts.

The myth is exaggerated, of course. Einstein was not some disconnected dilettante. He studied physics at top European universities, and received the equivalent of a PhD in physics. His famous 1905 papers were based on work he did as a student, and he discussed them with professors. One of his 1905 papers was a polished version of his dissertation. The details are in his biographies. He worked as a clerk because he could not find an academic job. His relativity paper gets the most credit, but it was not really so original.

It would be nice to see a true example of physics being advanced by someone outside the mainstream establishment. It ought to be possible, since all the leading knowledge is published. I just don't know of any good examples.

Sunday, June 10, 2012

Dark energy is an aether

Probably the biggest single misconception I come across in popular discussions of dark matter and dark energy is the accusation that these concepts are a return to the discredited idea of the aether. They are not -- in fact, they are precisely the opposite.

I do not agree with this. The main experimental prediction of the aether was that light has wave properties, and has the same properties everywhere.

Back in the later years of the 19th century, physicists had put together an incredibly successful synthesis of electricity and magnetism, topped by the work of James Clerk Maxwell. They had managed to show that these two apparently distinct phenomena were different manifestations of a single underlying "electromagnetism." One of Maxwell's personal triumphs was to show that this new theory implied the existence of waves traveling at the speed of light -- indeed, these waves are light, not to mention radio waves and X-rays and the rest of the electromagnetic radiation spectrum.

The puzzle was that waves were supposed to represent oscillations in some underlying substance, like water waves on an ocean. If light was an electromagnetic wave, what was "waving"? The proposed answer was the aether, sometimes called the "luminiferous aether" to distinguish it from the classical element. This idea had a direct implication: that Maxwell's description of electromagnetism would be appropriate as long as we were at rest with respect to the aether, but that its predictions (for the speed of light, for example) would change as we moved through the aether. The hunt was to find experimental evidence for this idea, but attempts came up short. The Michelson-Morley experiment, in particular, implied that the speed of light did not change as the Earth moved through space, in apparent contradiction with the aether idea.

So the aether was a theoretical idea that never found experimental support. In 1905 Einstein pointed out how to preserve the symmetries of Maxwell's equations without referring to aether at all, in the special theory of relativity, and the idea was relegated to the trash bin of scientific history.

Aether was a concept introduced by physicists for theoretical reasons, which died because its experimental predictions were ruled out by observation. Dark matter and dark energy are the opposite: they are concepts that theoretical physicists never wanted, but which are forced on us by the observations.

Here is what Michelson concluded in his 1881 paper, The Relative Motion of the Earth and the Luminiferous Ether:

The result of the hypothesis of a stationary ether is thus shown to be incorrect, and the necessary conclusion follows that the hypothesis is erroneous. This conclusion directly contradicts the explanation of the phenomenon of aberration which has been hitherto generally accepted, and which presupposes that the earth moves through the ether, the latter remaining at rest.

So only a particular aether hypothesis was negated. Lorentz later showed in 1895 that this conclusion was false, and that the experiment was consistent with an aether at rest. Lorentz himself said in 1895, "It is not my intention to ... express assumptions about the nature of the aether."

Contrary to Carroll, the aether was not necessarily thought to have a “frame of rest” in the 1800s. See Maxwell's 1878 essay for a survey of aether theories.

Dark energy is the discovery that the ubiquitous and uniform aether has positive energy and negative pressure. We do not know that the energy has a role in the transmission of light, so there may be more than one aether. But it fits right in with the aethers that Maxwell wrote about.
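For readers who want the standard textbook relation behind "positive energy and negative pressure", the Friedmann acceleration equation is sketched below. The symbols (scale factor a, energy density ρ, pressure p) and the choice p = -ρc², which is the cosmological-constant case, are my assumptions for illustration and are not taken from Carroll's post or Maxwell's essay.

```latex
\frac{\ddot a}{a} = -\frac{4\pi G}{3}\left(\rho + \frac{3p}{c^{2}}\right),
\qquad
p = -\rho c^{2}
\;\Longrightarrow\;
\frac{\ddot a}{a} = +\frac{8\pi G}{3}\,\rho > 0 .
```

With that pressure the bracket becomes ρ - 3ρ = -2ρ, so a uniform medium with positive energy density drives an accelerating expansion.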

Friday, June 8, 2012

Rejecting hidden variables

No such assumption was necessary for the original Copenhagen interpretation. The wave function cannot be observed directly, but possibilities for it are inferred from previous observations. Those functions evolve according to the Schroedinger equation, and can be used to predict observables. Making another observation allows us to restrict the set of possibilities for that wave function.

Physicists seem to be split on whether measurement causes a wavefunction collapse. If it does, then they are split over whether the collapse is objective, or related to observer consciousness. If there is no collapse, then they are split over whether part of the wave function escapes to another world where parallel observers see different outcomes.

I say that these dilemmas are all nonsense, and that the core mistake is to assume that the universe has a faithful mathematical representation and that the wave function is real. It is better to assume that there is no such representation, that there are no hidden variables, that the wave function is not real but just a reflection of our knowledge about previous observations.

Matt Leifer gives a modern explanation of why hidden variables don't work. He has his own peculiar terminology, and says that these are the only acceptable views:

Many people who advocate a psi-epistemic view also adopt an anti-realist or neo-Copenhagen point of view on quantum theory in which the quantum state does not represent knowledge about some underlying reality, but rather it only represents knowledge about the consequences of measurements that we might make on the system. ...

As emphasized by Harrigan and Spekkens, a variant of the EPR argument favoured by Einstein shows that any psi-ontic hidden variable theory must be nonlocal.

So he says that maybe the wave function is real (psi-ontic) and there are some nonlocal hidden variables, or the wave function is subjective (psi-epistemic) and there is no underlying reality, as Bohr said:

There is no quantum world. There is only an abstract physical description. It is wrong to think that the task of physics is to find out how nature is. Physics concerns what we can say about nature…

Leifer cannot accept that, and insists on ontic states (where some math formula faithfully matches reality).

It seems to me that searching for psi-ontic states or for an underlying mathematical reality is just continuing to fall for the hidden variable fallacy. It is better to do quantum mechanics without any hidden variable assumption. Go ahead and assume that there is an underlying physical reality, but do not assume that it is faithfully represented by hidden variables. Observations have mathematical values, but not the physical reality.

Wednesday, June 6, 2012

Free will theorem

I say that free will is not a scientific issue.

I have criticized Free will in the multiverse.

On the physics side, the Free will theorem claims that human free will is linked to quantum indeterminism.

The theorem states that, given the axioms, if the two experimenters in question are free to make choices about what measurements to take, then the results of the measurements cannot be determined by anything previous to the experiments.

I don't see how this theorem says anything about free will. The theorem has three plausible sounding axioms, but it also assumes a deterministic model for producing photon pairs. According to the model, the photons seem to have some random aspects that observers can see if they are allowed to make their own random choices. So the theorem shows that the models are really indeterministic.

This is all just a fancy way of saying that there are quantum experiments that look random. This doesn't mean much as coin tosses look random also, but could be deterministic if you knew more about the coin.
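The coin-toss remark can be sketched in a few lines. Below is a toy "coin" driven by a linear congruential generator. The function name and seed are hypothetical, and the multiplier and increment are commonly published constants that I am assuming here for illustration. The output looks like random heads and tails, but it is completely determined by the seed.

```python
def coin_flips(seed, n):
    """Return n flips from a fully deterministic 32-bit generator."""
    state = seed
    flips = []
    for _ in range(n):
        # One step of a linear congruential generator (assumed constants).
        state = (1664525 * state + 1013904223) % 2**32
        # Use the top bit of the state as the coin face.
        flips.append("H" if state >> 31 else "T")
    return "".join(flips)

sequence = coin_flips(42, 200)
print(sequence[:40])
```

Anyone who knows the seed and the rule can predict every flip, while someone who sees only the flips has a hard time telling it from chance.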

Tuesday, June 5, 2012

Coyne says freedom was killed by science

What is important to me is whether our decisions are predetermined (with perhaps a dollop of quantum indeterminacy), and therefore we lose our freedom to really make different choices when given alternatives. The old notion of true freedom -- the ability to do otherwise -- has been killed dead by science. Why are people trying to save the notion of free will by confecting other definitions? Why aren’t they, instead, telling the faithful that they can’t really choose whether to be saved or make Jesus their personal saviour? The faithful are dualists, and religion is our enemy.

This is ridiculous. He likes to lecture us on what science is all about, but he gets it badly wrong. His rant was triggered by atheist-physicist Victor Stenger, who writes:

Research in neuroscience has revealed a startling fact that revolutionizes much of what we humans have previously taken for granted about our interactions with the world outside our heads: Our consciousness is really not in charge of our behavior.

I have criticized Coyne and others on free will here, here, and here. I say that free will is not a scientific issue.

If science really proved these things, ask yourself: Where is the published paper? Who got the Nobel Prize? These scientists are an embarrassment to science.

The "dollop of quantum indeterminacy" is especially strange. Stenger argues:

The moving parts of the brain are heavy by microscopic standards and move around at relatively high speeds because the brain is hot. Furthermore, the distances involved are large by these same microscopic standards. It is easy to demonstrate quantitatively that quantum effects in the brain are not significant. So, even though libertarians are correct that determinism is false at the microphysical, quantum level, the brain is for all practical purposes a deterministic Newtonian machine, so we don't have free will as they define it.

This argument is frequently made, but I do not believe it. There are chemical reactions in the brain, and quantum effects are essential for all such reactions. So I do not know how anyone can say that quantum effects are insignificant. And even if that were true, it does not follow that the brain is a deterministic Newtonian machine. No one has succeeded in predicting brain behavior, except in trivial ways.

Update: I agree with this podcast today by Pigliucci and Churchland (at about 50:00) that it is stupid to say that "free will is an illusion", as Coyne, Stenger, and Sam Harris say. Yes, it is an illusion in the trivial sense that a table is an illusion. If "illusion" means that there is a materialist explanation in terms of smaller components, then it is an illusion. But if it is an attempt to deny that we can evaluate consequences and make decisions, then they are manifestly wrong. They are denying the facts and presenting an anti-science view of the world.

Massimo Pigliucci responds:

Roger, your comment reflects the attitude of people like Harris, Coyne, Rosenberg et al., but I think is too quick. First, science cannot disprove an incoherent concept like contra-causal free will. Indeed, philosophy can do that (because it's a matter of logic). Second, this whole thing has very little to do with religion, because religionists believe in contra-causal free will, which is not the target of this research. Third, there is a danger in uncritically accepting Harris-like sweeping statements about human volition, and that danger is that we throw out human agency, ethics, and pretty much everything that makes us human, quickly leading nihilism (which in fact Rosenberg at least openly embraces).

Monday, June 4, 2012

Explained by local deterministic processes

The puzzling properties of entangled quantum states, first noted by Einstein, Podolsky and Rosen, who suspected that quantum mechanics is not a complete theory, were dramatically delineated by the remarkable work of John Bell. Bell showed that certain statistical correlations between properties of two physically separated particles, which were produced in an entangled quantum state, cannot be explained by any theory of local deterministic processes even if other unobserved properties (‘hidden variables') are allowed for.

None of this is correct. Entanglement was part of quantum mechanics before the 1935 EPR paper. Einstein got it from Popper's 1934 experiment. EPR was about the uncertainty principle and completeness, not spin and determinism. More importantly, Bell's theorem only shows that quantum mechanics differs from certain local hidden variable theories. It says nothing about local deterministic processes.

The Wolf statement is like saying, "relativity experiments like Michelson-Morley show that light cannot be explained by any theory of space and time, even if the aether is allowed for." Well, no. Relativity explains Michelson-Morley and quantum mechanics explains spin entanglement.

Relativity does require some adjustments to your preconceptions about space and time, and quantum mechanics requires some adjustments to your preconceptions about hidden variables. If you refuse to make those adjustments, and insist on making your own assumptions, then sure, you will get some contradictions. That is what Bell and those Wolf Prize winners discovered. But they did not discover any experiments that cannot be explained by local deterministic processes.
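For readers who want to see the numbers behind the Bell test discussion, here is a minimal sketch using the CHSH form of the inequality. That is the usual textbook version and is my choice, not something spelled out in the Wolf statement. The function names, the measurement angles, and the particular hidden-variable model (a hidden angle carried by each pair) are all assumptions made for illustration. The sketch compares the quantum formula for spin correlations with one toy local hidden variable model.

```python
import math

def e_quantum(a, b):
    """Quantum prediction for the spin correlation of a singlet pair."""
    return -math.cos(a - b)

def e_local(a, b, n=3600):
    """Correlation from one toy local hidden variable model (an assumption).

    Each pair carries a hidden angle lam; each side outputs +1 or -1
    using only its own detector setting and lam.
    """
    total = 0
    for k in range(n):
        lam = 2 * math.pi * (k + 0.5) / n
        left = 1 if math.cos(a - lam) >= 0 else -1
        right = -1 if math.cos(b - lam) >= 0 else 1
        total += left * right
    return total / n

def chsh(e, a1, a2, b1, b2):
    """CHSH combination of four correlations."""
    return e(a1, b1) - e(a1, b2) + e(a2, b1) + e(a2, b2)

# Angle choices at which the quantum formula reaches its largest CHSH value.
settings = (0.0, math.pi / 2, math.pi / 4, 3 * math.pi / 4)
s_quantum = chsh(e_quantum, *settings)
s_local = chsh(e_local, *settings)
print(abs(s_quantum), abs(s_local))
```

The quantum value comes out at 2√2, while any model of this hidden-variable type stays at or below 2. That gap is the whole content of the theorem: quantum mechanics differs from that class of hidden variable theories.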

Sunday, June 3, 2012

Freud fled the Nazis

There is a growing body of literature that points to the ongoing legacy of Freud’s work for understandings of questions of race and cultural difference, as well as for understandings of the political frameworks through which these questions of difference are addressed. The ways in which both psychoanalysis and Freud himself were persecuted as Jewish by Nazis and their collaborators have been well documented. As Karen Brecht and others have pointed out, “During the 1930s, while there were about 56 members of the German Psychoanalytic Society (Deutsche Psychoanalytische Gesellschaft, DPG); only nine were German ‘Aryans.’ ... Most of Germany’s Jewish psychoanalysts fled from Germany and Nazi-occupied Europe in the early 1930s; ...

I don't get why this is so controversial. It appears to be well-sourced and not any more controversial than a lot of other Freud opinions.

While Freud himself defined psychoanalysis in universalist terms, a number of scholars have argued that psychoanalysis can nonetheless be understood as a Jewish science. Sander Gilman has argued that psychoanalysis can be understood as a Jewish science not simply because many of its founders were themselves Jewish and because psychoanalysis was attacked as a Jewish science, but because Freud’s development of psychoanalysis was itself informed by the ways in which Jews were pathologized. He argues that Freud made the Jew invisible in psychoanalysis in response to the ways in which Jews were pathologized, and that “the new science of psychoanalysis provided status for the Jew as scientist while re-forming the idea of medical science to exclude the debate about the implication of race.”

Saturday, June 2, 2012

No scientific model for some problems

Note what Chomsky is saying: that neither behaviorism nor any other scientific model can explain -- or is even close to explaining -- how humans learn language, which is arguably our defining trait. (In spite of his own emphasis on the genetic underpinnings of language, Chomsky has been cruelly dismissive of evolutionary psychology, which he once called a “philosophy of mind with a little bit of science thrown in.”)

So Skinner and Freud continue to be idolized, long after they have been discredited, because of low standards in the field of psychology.

In a recent column on philosopher of science Thomas Kuhn, I pointed out that some fields, especially “hard” ones like physics and chemistry, converge on a paradigm and rapidly progress, while others “remain in a state of constant flux.” Fields that address human thought and behavior -- anthropology, economics, sociology, political science, psychology -- are prime example of research endeavors that lurch faddishly from one paradigm to another.

Will psychologists ever find a paradigm powerful enough to unify the field and help it achieve the rigor of, say, nuclear physics or molecular biology? William James had his doubts. More than a century ago he fretted that psychology might never transcend its “confused and imperfect state.” Harvard psychologist Howard Gardner has argued that James’s concerns “have proved all too justified. Psychology has not added up to an integrated science, and it is unlikely ever to achieve that status.” Gardner once told me that questions about free will, the self, consciousness and other topics with which psychologists (and, tellingly, philosophers) wrestle might not be amenable to conventional scientific reductionism, in spite of all the advances of modern genetics, neuroscience and brain imaging.

I am told that Chomsky's views are in the minority, even though everyone agrees that they were brilliant.

I believe that even the hard sciences have problems that are not solved by any scientific model, and they will never be. Free will is one. Physicists have lots of opinions about what is deterministic or probabilistic, but those opinions have no scientific basis.