Friday, October 30, 2015

Trying to apply entanglement to humanism

Marilynne Robinson writes in The Nation, an extreme left-wing magazine:

Humanism, Science, and the Radical Expansion of the Possible

Why we shouldn’t let neuroscience banish mystery from human life.

Humanism was the particular glory of the Renaissance. ...

The antidote to our gloom is to be found in contemporary science. ...

The phenomenon called quantum entanglement, relatively old as theory and thoroughly demonstrated as fact, raises fundamental questions about time and space, and therefore about causality.

Particles that are “entangled,” however distant from one another, undergo the same changes simultaneously. This fact challenges our most deeply embedded habits of thought. To try to imagine any event occurring outside the constraints of locality and sequence is difficult enough. Then there is the problem of conceiving of a universe in which the old rituals of cause and effect seem a gross inefficiency beside the elegance and sleight of hand that operate discreetly beyond the reach of all but the most rarefied scientific inference and observation. However pervasive and robust entanglement is or is not, it implies a cosmos that unfolds or emerges on principles that bear scant analogy to the universe of common sense. It is abetted in this by string theory, which adds seven unexpressed dimensions to our familiar four. And, of course, those four seem suddenly tenuous when the fundamental character of time and space is being called into question. Mathematics, ontology, and metaphysics have become one thing. Einstein’s universe seems mechanistic in comparison. Newton’s, the work of a tinkerer. If Galileo shocked the world by removing the sun from its place, so to speak, then this polyglot army of mathematicians and cosmologists who offer always new grounds for new conceptions of absolute reality should dazzle us all, freeing us at last from the circle of old Urizen’s compass. But we are not free. ...

I find the soul a valuable concept, a statement of the dignity of a human life and of the unutterable gravity of human action and experience. ...

I am content to place humankind at the center of Creation. ...

I am a theist, so my habits of mind have a particular character.

I am not trying to summarize this, or even to comment on the merits of her arguments. I just want to point out how bad physics makes its way into humanities essays.

Wednesday, October 28, 2015

Krauss against philosophers

Physicist Lawrence Krauss says in an interview:


Freeman Dyson, who is a brilliant physicist and a contrarian, he had pointed out based on some research — he’s 90 years old, but he had done some research over the years — I was in a meeting in Singapore with him when he pointed out that we really don’t know if gravity is a quantum theory. Electromagnetism is a quantum theory because we know there are quanta of electromagnetism called photons. Right now they’re coming, shining in my face and they’re going into the camera that’s being used to record this and we can measure photons. There are quanta associated with all of the forces of nature. If gravity is a quantum theory, then there must be quanta that are exchanged, that convey the gravitational force; we call those gravitons. They’re the quantum version of gravitational waves, the same way photons are the quantum version of electromagnetic waves. But what Freeman pointed out is that there’s no terrestrial experiment that could ever measure a single graviton. He could show that in order to build an experiment that would do that, you’d have to make the experiment so massive that it would actually collapse to form a black hole before you could make the measurement. So he said there’s no way we’re ever going to measure gravitons; there’s no way that we’ll know whether gravity is a quantum theory.

This is correct. The whole subject of quantum gravity should be considered part of theology or something else, because it is not science.

He notes that the BICEP2 experiment tried (unsuccessfully) to find evidence of quantization of an inflaton field, a related issue.

Does physics need philosophy?

We all do philosophy and of course, scientists do philosophy. Philosophy is critical reasoning, logical reasoning, and analysis—so in that sense, of course physics needs philosophy. But does it need philosophers? That’s the question. And the answer is not so much anymore. I mean it did early on. The earlier physicists were philosophers. When the questions weren’t well defined, that’s when philosophy becomes critically important and so physics grew out of natural philosophy, but it’s grown out of it, and now there’s very little relationship between what physicists do and what even philosophers of science do. So of course physics needs philosophy; it just doesn’t need philosophers.

Philosophers have rejected 20th-century science, and have become more anti-science than most creationists. So asking whether physics needs philosophers is like asking whether biology needs creationists or whether astronomy needs astrologers.

Krauss was burned by a philosopher of physics who wrote a NY Times book review trashing Krauss's title, and without addressing the content of the book. Yeah, the title was overstated, but the reviewer should have been able to get past the title.

Monday, October 26, 2015

Foundation of probability theory

When physicists talk about chance, probability, and determinism, they are nearly always hopelessly confused. See, for example, the recent Delft experiment claiming to prove that nature is random.


Whether electrons have some truly random behavior is a bit like asking whether humans have free will. It certainly appears so; there is even a relation between the two concepts, and quantum mechanics leaves the possibilities open.

Randomness is tricky to define. Mathematicians have thought very carefully about the issues, and reached a 20th-century consensus on how to formulate it. So it is best to look at what they say.

UCLA math professor Terry Tao is teaching a class on probability theory, and this is from his introductory notes:

By default, mathematical reasoning is understood to take place in a deterministic mathematical universe. In such a universe, any given mathematical statement S (that is to say, a sentence with no free variables) is either true or false, with no intermediate truth value available. Similarly, any deterministic variable x can take on only one specific value at a time.

However, for a variety of reasons, both within pure mathematics and in the applications of mathematics to other disciplines, it is often desirable to have a rigorous mathematical framework in which one can discuss non-deterministic statements and variables – that is to say, statements which are not always true or always false, but in some intermediate state, or variables that do not take one particular value or another with definite certainty, but are again in some intermediate state. In probability theory, which is by far the most widely adopted mathematical framework to formally capture the concept of non-determinism, non-deterministic statements are referred to as events, and non-deterministic variables are referred to as random variables. In the standard foundations of probability theory, as laid out by Kolmogorov, we can then model these events and random variables by introducing a sample space (which will be given the structure of a probability space) to capture all the ambient sources of randomness; events are then modeled as measurable subsets of this sample space, and random variables are modeled as measurable functions on this sample space. (We will briefly discuss a more abstract way to set up probability theory, as well as other frameworks to capture non-determinism than classical probability theory, at the end of this set of notes; however, the rest of the course will be concerned exclusively with classical probability theory using the orthodox Kolmogorov models.)

Note carefully that sample spaces (and their attendant structures) will be used to model probabilistic concepts, rather than to actually be the concepts themselves. This distinction (a mathematical analogue of the map-territory distinction in philosophy) actually is implicit in much of modern mathematics, when we make a distinction between an abstract version of a mathematical object, and a concrete representation (or model) of that object.

Note his mention of the map-territory distinction, which I have emphasized here many times. Randomness is described by ordinary mathematical constructions that look non-random. All of the formulas and theorems about randomness can be derived without any belief in true randomness. It is not clear that there is any such thing as true randomness. Randomness is a matter of interpretation.

Quantum mechanics often predicts probabilities, and physicists often say that this means that nature is random. But as you can see, mathematicians deal with probability and random variables in completely deterministic models, so probability formulas do not imply true randomness.
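To make the point concrete, here is a minimal Python sketch of a finite Kolmogorov-style model, assuming the simplest possible setup: the sample space is the 16 outcomes of four coin tosses, an event is a subset (written as a predicate), a random variable is an ordinary function on outcomes, and the probability measure is uniform counting. The names `sample_space`, `prob`, `expectation`, and `heads` are illustrative, not Tao's notation. Nothing in the code is random; every probability is computed deterministically.

```python
from fractions import Fraction
from itertools import product

# Sample space: all 16 sequences of four coin tosses (1 = heads, 0 = tails).
sample_space = list(product([0, 1], repeat=4))

def prob(event):
    """Uniform probability measure: |event| / |sample space|.

    An event is modeled as a predicate, i.e. a subset of the sample space.
    """
    favorable = sum(1 for omega in sample_space if event(omega))
    return Fraction(favorable, len(sample_space))

def expectation(random_variable):
    """Expected value of a random variable, i.e. an ordinary function on outcomes."""
    total = sum(random_variable(omega) for omega in sample_space)
    return Fraction(total, len(sample_space))

def heads(omega):
    """A 'random variable': the number of heads. It is just a deterministic function."""
    return sum(omega)

print(prob(lambda omega: heads(omega) == 2))  # 3/8
print(expectation(heads))                     # 2
```

The exact `Fraction` arithmetic is a deliberate choice: it shows the probabilities are exact consequences of counting, with no sampling involved.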

The Schroedinger equation is deterministic and time-reversible. And yet quantum mechanics is usually understood as indeterministic and irreversible. Is this a problem? No, not really. As you can see, mathematicians are always using deterministic formulas to model probability. These issues drive physicists like Sean M. Carroll to believe in the many-worlds interpretation (MWI), but that conclusion is entirely mistaken.
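The claim that a deterministic, time-reversible equation can still yield probabilities can be checked numerically. A minimal Python sketch, assuming a single two-level system with the illustrative Hamiltonian H = σ_x and ħ = 1 (neither choice comes from the post): the state evolves deterministically, applying the inverse unitary recovers the initial state, and the Born-rule probabilities are just squared amplitudes computed from that deterministic state.

```python
import numpy as np

# Pauli-X matrix; take H = sigma_x in units where hbar = 1 (an illustrative choice).
sigma_x = np.array([[0, 1], [1, 0]], dtype=complex)
identity = np.eye(2, dtype=complex)

def evolve(t):
    """Unitary propagator U(t) = exp(-i H t) for H = sigma_x.

    Because sigma_x squared is the identity, the exponential has the
    closed form cos(t) I - i sin(t) sigma_x.
    """
    return np.cos(t) * identity - 1j * np.sin(t) * sigma_x

psi0 = np.array([1, 0], dtype=complex)  # start in the "up" state
U = evolve(0.7)

psi_t = U @ psi0                        # deterministic forward evolution
psi_back = U.conj().T @ psi_t           # apply U^dagger to run time backward

born_probs = np.abs(psi_t) ** 2         # Born-rule probabilities, computed deterministically

print(np.allclose(U.conj().T @ U, identity))  # True: U is unitary
print(np.allclose(psi_back, psi0))            # True: the initial state is recovered
print(born_probs, born_probs.sum())
```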

Saturday, October 24, 2015

Delft experiment did not prove randomness

I posted about the Delft quantum experiment, but I skipped the randomness news. Here is ScienceDaily's description:


The experiment gives the strongest refutation to date of Albert Einstein's principle of "local realism," which says that the universe obeys laws, not chance, and that there is no communication faster than light.

As described in Hanson's group web the Delft experiment first "entangled" two electrons trapped inside two different diamond crystals, and then measured the electrons' orientations. In quantum theory entanglement is powerful and mysterious: mathematically the two electrons are described by a single "wave-function" that only specifies whether they agree or disagree, not which direction either spin points. In a mathematical sense, they lose their identities. "Local realism" attempts to explain the same phenomena with less mystery, saying that the particles must be pointing somewhere, we just don't know their directions until we measure them.

This is a pretty good description. The so-called (and misnamed) "local realism" seemed less mysterious to Einstein and others because it assumes that electrons are little spinning particles with definite positions, momenta, and spins, even when we cannot see them. Quantum mechanics could then be seen as describing our uncertainty about reality.
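The "local realism" picture in that quote, where each particle carries a definite answer for every possible measurement, can be written down as a model and tested against the CHSH form of Bell's inequality, the form usually used in such experiments. A minimal Python sketch, assuming two measurement settings per side and outcomes of ±1 (the function name is illustrative): every deterministic local assignment gives |S| = 2, and a hidden-variable theory is just a probability mixture of such assignments, so its average can never exceed 2.

```python
from itertools import product

def chsh_value(A, B):
    """CHSH combination for one deterministic assignment of outcomes.

    A[x] is Alice's predetermined result (+1 or -1) for setting x in {0, 1};
    B[y] is Bob's for setting y. Locality means A does not depend on y
    and B does not depend on x.
    """
    E = lambda x, y: A[x] * B[y]
    return E(0, 0) + E(0, 1) + E(1, 0) - E(1, 1)

# Enumerate all 16 local deterministic "hidden variable" strategies.
values = [chsh_value(A, B)
          for A in product([+1, -1], repeat=2)
          for B in product([+1, -1], repeat=2)]

print(max(abs(v) for v in values))  # 2
```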

We have known since about 1930 that this Einstein local realism is wrong. Quantum mechanics teaches that electrons have wave-like properties that prohibit such definiteness.

The Delft experiment is just the latest in 90 years of work supporting quantum principles. Part of the acclaim for this experiment is in its approach to randomness:

This amazing experiment called for extremely fast, unpredictable decisions about how to measure the electron orientations. If the measurements had been predictable, the electrons could have agreed in advance which way to point, simulating communications where there wasn't really any, a gap in the experimental proof known as a "loophole." To close this loophole, the Delft team turned to ICFO, who hold the record for the fastest quantum random number generators. ICFO designed a pair of "quantum dice" for the experiment: a special version of their patented random number generation technology, including very fast "randomness extraction" electronics. This produced one extremely pure random bit for each measurement made in the Delft experiment. The bits were produced in about 100 ns, the time it takes light to travel just 30 meters, not nearly enough time for the electrons to communicate. "Delft asked us to go beyond the state of the art in random number generation. Never before has an experiment required such good random numbers in such a short time." Says Carlos Abellán, a PhD student at ICFO and a co-author of the Delft study.

This is quackery, as there is no proof that there is any such thing as randomness. I have explained it on this blog many times, such as here and in my 2015 FQXi essay.
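The quote does not say how ICFO's "randomness extraction" electronics work, so the following is not their method. As a generic illustration of what an extractor does, here is the classic von Neumann debiasing procedure in Python, assuming independent input bits with a fixed bias: it turns biased bits into unbiased ones, and the rule itself is entirely deterministic. The function name is illustrative.

```python
def von_neumann_extract(bits):
    """Von Neumann debiasing: read bits in non-overlapping pairs,
    map (0, 1) -> 0 and (1, 0) -> 1, and discard (0, 0) and (1, 1).

    If the input bits are independent with a fixed bias p, the two kept
    pairs each occur with probability p(1 - p), so each output bit is unbiased.
    A trailing unpaired bit is ignored.
    """
    out = []
    for i in range(0, len(bits) - 1, 2):
        a, b = bits[i], bits[i + 1]
        if a != b:
            out.append(a)
    return out

print(von_neumann_extract([1, 1, 0, 1, 1, 0, 0, 0, 1, 0]))  # [0, 1, 1]
```

The price of the trick is throughput: on average at most one output bit per four input bits survives, which is one reason practical extractors use other constructions.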

For the ICFO team, the participation in the Delft experiment was more than a chance to contribute to fundamental physics. Prof. Morgan Mitchell comments: "Working on this experiment pushed us to develop technologies that we can now apply to improve communications security and high-performance computing, other areas that require high-speed and high-quality random numbers."

If you want high-speed and high-quality random numbers, just toss a coin 512 times, and apply SHA-512 repeatedly. There is no known way to do better than that.
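One way to read "apply SHA-512 repeatedly" is as a hash-based generator seeded by the coin tosses. A minimal Python sketch under that assumption (the function names and the counter construction are illustrative choices, not a standard): the 512 tosses become a 64-byte seed, and each output block is SHA-512 of the seed plus a counter. Chaining outputs directly, h(n+1) = SHA-512(h(n)), would be weaker, since anyone who saw one output could compute all later ones.

```python
import hashlib

def seed_from_coin_tosses(tosses):
    """Pack a string of 512 coin tosses ('H'/'T') into a 64-byte seed."""
    if len(tosses) != 512 or set(tosses) - {"H", "T"}:
        raise ValueError("expected exactly 512 tosses of 'H' or 'T'")
    bits = "".join("1" if t == "H" else "0" for t in tosses)
    return int(bits, 2).to_bytes(64, "big")

def sha512_stream(seed, n_blocks):
    """Yield n_blocks 64-byte outputs, each SHA-512(seed || counter).

    Hashing seed plus counter (rather than re-hashing the previous output)
    means a published block does not reveal the next one, assuming
    SHA-512 is hard to invert.
    """
    for counter in range(n_blocks):
        yield hashlib.sha512(seed + counter.to_bytes(8, "big")).digest()

seed = seed_from_coin_tosses("HT" * 256)  # placeholder tosses, not real coin flips
blocks = list(sha512_stream(seed, 3))
print(len(blocks), len(blocks[0]))  # 3 64
```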

With the help of ICFO's quantum random number generators, the Delft experiment gives a nearly perfect disproof of Einstein's world-view, in which "nothing travels faster than light" and "God does not play dice." At least one of these statements must be wrong. The laws that govern the Universe may indeed be a throw of the dice.

This is confusing. They have disproved the hidden-variable version of Einstein's local realism. They certainly have not found anything going faster than light, and they have not proved that God plays dice. They used a random number generator to get results that look random. Such an experiment cannot possibly prove the existence of random numbers.

Thursday, October 22, 2015

No-loophole Bell experiment hits newspapers

I discussed the latest Bell test experiment in August, and now it has hit the NY Times:


In a landmark study, scientists at Delft University of Technology in the Netherlands reported that they had conducted an experiment that they say proved one of the most fundamental claims of quantum theory — that objects separated by great distance can instantaneously affect each other’s behavior.

The finding is another blow to one of the bedrock principles of standard physics known as “locality,” which states that an object is directly influenced only by its immediate surroundings.

No, this is not a blow to locality at all. It does confirm quantum mechanics, and close some loopholes in previous experiments, but that is all.

The Delft study, published Wednesday in the journal Nature, lends further credence to an idea that Einstein famously rejected. He said quantum theory necessitated “spooky action at a distance,” and he refused to accept the notion that the universe could behave in such a strange and apparently random fashion.

In particular, Einstein derided the idea that separate particles could be “entangled” so completely that measuring one particle would instantaneously influence the other, regardless of the distance separating them.

Einstein was deeply unhappy with the uncertainty introduced by quantum theory and described its implications as akin to God’s playing dice.

This is hopelessly confused. Randomness and action-at-a-distance are two separate issues.

Two distant particles can be entangled in the sense that their states are related, and measuring one gives info about the other. But there has never been an experiment showing that an action on one particle has an effect on a particle outside its light cone.

The new experiment, conducted by a group led by Ronald Hanson, a physicist at the Dutch university’s Kavli Institute of Nanoscience, and joined by scientists from Spain and England, is the strongest evidence yet to support the most fundamental claims of the theory of quantum mechanics about the existence of an odd world formed by a fabric of subatomic particles, where matter does not take form until it is observed and time runs backward as well as forward.

The researchers describe their experiment as a “loophole-free Bell test” in a reference to an experiment proposed in 1964 by the physicist John Stewart Bell as a way of proving that “spooky action at a distance” is real.

“These tests have been done since the late ’70s but always in the way that additional assumptions were needed,” Dr. Hanson said. “Now we have confirmed that there is spooky action at distance.”

I guess I cannot blame John Markoff, the NY Times reporter, because the physicists themselves are reciting this mystical nonsense.

No, this does not confirm that matter is not real until it is observed, or that time runs backwards, or that there is any action at a distance.

According to the scientists, they have now ruled out all possible so-called hidden variables that would offer explanations of long-distance entanglement based on the laws of classical physics.

Finally, in the 9th paragraph, we have a correct statement. John von Neumann considered and rejected hidden variable theories in a 1930 textbook, and that has been the mainstream view ever since. Some fringe physicists, plus Einstein, have claimed that von Neumann's argument was wrong, but all attempts to revive hidden variables have been decisively rejected for an assortment of reasons.

The Delft researchers were able to entangle two electrons separated by a distance of 1.3 kilometers, slightly less than a mile, and then share information between them. Physicists use the term “entanglement” to refer to pairs of particles that are generated in such a way that they cannot be described independently. The scientists placed two diamonds on opposite sides of the Delft University campus, 1.3 kilometers apart.

Each diamond contained a tiny trap for single electrons, which have a magnetic property called a “spin.” Pulses of microwave and laser energy are then used to entangle and measure the “spin” of the electrons.

The distance — with detectors set on opposite sides of the campus — ensured that information could not be exchanged by conventional means within the time it takes to do the measurement.

This is a decent description of the experiment. Yes, the electron pair is entangled in the sense that it is generated in such a way that it cannot be described as independent electrons.
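The timing argument is simple arithmetic. A quick Python check, using the 1.3 km separation quoted above and the "about 100 ns" bit-generation time from ScienceDaily's description (the variable names are illustrative): light needs a few microseconds to cross the campus, which is the margin the quoted sentence relies on.

```python
SPEED_OF_LIGHT = 299_792_458  # metres per second (exact, by definition of the metre)

separation_m = 1_300          # distance between the two Delft labs, from the article
bit_time_s = 100e-9           # "about 100 ns" to produce each random bit

light_time_s = separation_m / SPEED_OF_LIGHT
light_distance_m = SPEED_OF_LIGHT * bit_time_s

print(f"light crosses 1.3 km in {light_time_s * 1e6:.2f} microseconds")  # 4.34
print(f"light travels {light_distance_m:.1f} m in 100 ns")              # 30.0
```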

The failure of hidden variable theories also shows that the pair cannot be described by some sort of joint probability distribution on classical particles.

But that still leaves the possibility that the pair can be physically separated so that neither has any effect on the other, even though there is no mathematical description of either particle that is not tied to the other. Measuring one particle affects our best mathematical description of the pair, but has no causal physical effect on the other particle.

That last possibility is what the quantum textbooks have said for 85 years, more or less.
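The distinction between changing a description and causing a physical effect shows up directly in the quantum formulas. A minimal Python sketch, assuming an ideal spin singlet with the textbook correlation -cos(alpha - beta) (the function names and angles are illustrative): the joint outcome probabilities depend on both measurement angles, yet Bob's own statistics come out 1/2 whatever angle Alice picks, so nothing Alice does can be read off at Bob's end.

```python
import math

def joint_prob(a, b, alpha, beta):
    """Quantum prediction for a spin singlet measured along angles alpha and beta.

    a and b are the outcomes (+1 or -1); the correlation is -cos(alpha - beta).
    """
    return (1 - a * b * math.cos(alpha - beta)) / 4

def bob_marginal(b, alpha, beta):
    """Probability of Bob's outcome b, summing over Alice's unseen outcome."""
    return sum(joint_prob(a, b, alpha, beta) for a in (+1, -1))

beta = 0.3  # Bob's fixed setting (illustrative)
for alpha in (0.0, math.pi / 4, math.pi / 2, 2.0):
    # Bob's probability stays 0.5, up to floating-point rounding.
    print(alpha, bob_marginal(+1, alpha, beta))
```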

As I keep saying on this blog, you need to get out of your head the idea that physics and math are the same thing. We have physical phenomena, and mathematical models and representations. Physics is all about finding mathematical models for what we observe, but there is no reason to believe that there is some underlying unobservable physical reality that is perfectly identical to some mathematically exact formulas. Einstein, Bohm, Bell, Tegmark, and others have gone down that path, and just come up with nonsense. Only by making such hidden variable assumptions does this experiment lead you to question locality.

Locality is not wrong. Hidden variables are wrong.
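For comparison with the hidden-variable bound |S| ≤ 2 of the CHSH inequality, here is the textbook quantum prediction in Python. A minimal sketch, assuming ideal singlet correlations -cos(alpha - beta) and the usual optimal angles (real experiments measure lower values because of imperfections): quantum mechanics reaches 2√2, and that gap is what rules out local hidden variables.

```python
import math

def correlation(alpha, beta):
    """Quantum correlation <AB> for a spin singlet measured along alpha and beta."""
    return -math.cos(alpha - beta)

# Standard CHSH angles that maximize the quantum value.
a0, a1 = 0.0, math.pi / 2
b0, b1 = math.pi / 4, -math.pi / 4

S = (correlation(a0, b0) + correlation(a0, b1)
     + correlation(a1, b0) - correlation(a1, b1))

print(abs(S), 2 * math.sqrt(2))  # both about 2.828, above the local bound of 2
```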

The tests take place in a mind-bending and peculiar world. According to quantum mechanics, particles do not take on formal properties until they are measured or observed in some way. Until then, they can exist simultaneously in two or more places. Once measured, however, they snap into a more classical reality, existing in only one place.

Back to nonsense. Quantum mechanics is all about what can be observed. If you talk about what is not observed, such as saying that particles can be in two places at once, you have left quantum mechanics and entered the subject of interpretations. Some interpretations say that a particle can be in two places at once, and some do not. Some say that there is no such thing as a particle.

Indeed, the experiment is not merely a vindication for the exotic theory of quantum mechanics, it is a step toward a practical application known as a “quantum Internet.” Currently, the security of the Internet and the electronic commerce infrastructure is fraying in the face of powerful computers that pose a challenge to encryption technologies based on the ability to factor large numbers and other related strategies.

Researchers like Dr. Hanson envision a quantum communications network formed from a chain of entangled particles girdling the entire globe. Such a network would make it possible to securely share encryption keys, and know of eavesdropping attempts with absolute certainty.

Now they are claiming applications to quantum cryptography and quantum computers. No, quantum computers are not fraying the security of the internet and electronic commerce. No one has even made one true qubit, the smallest building block for a quantum computer. There is no security advantage to a quantum internet, and there is a long list of technical obstacles to even making an attempt. For example, it is extremely difficult to make a router to relay a single bit of info.

A potential weakness of the experiment, he suggested, is that an electronic system the researchers used to add randomness to their measurement may in fact be predetermined in some subtle way that is not easily detectable, meaning that the outcome might still be predetermined as Einstein believed.

To attempt to overcome this weakness and close what they believe is a final loophole, the National Science Foundation has financed a group of physicists led by Dr. Kaiser and Alan H. Guth, also at M.I.T., to attempt an experiment that will have a better chance of ensuring the complete independence of the measurement detectors by gathering light from distant objects on different sides of the galaxy next year, and then going a step further by capturing the light from objects known as quasars near the edge of the universe in 2017 and 2018.

No experiment can truly rule out superdeterminism. This group is trying to show that superdeterminism, if it exists, would go back to the early universe. Isn't that what the superdeterminists believe anyway? There are very few followers of such a bizarre theory, so I would not know.

I just happened to watch this video titled Deepak Chopra destroyed by Sam Harris. Harris and Michael Shermer mock Chopra for citing quantum nonlocality, and say that none of them are competent to discuss it because none of them are physicists.

As much as Chopra might be guilty of babbling what Shermer calls "woo", I don't see how this NY Times article is any better. It is also claiming that nonlocality has been proved, and that matter doesn't really exist until you look at it. That is essentially the same as what Chopra was saying, except that he goes a step further and ties it into consciousness.

I would have thought that physicists would object to this sort of mysticism being portrayed as cutting edge physics in the NY Times. Yes, it is a good experiment, but it just confirms the theory that was developed in 1925-1930, and that received Nobel prizes in 1932-1933. My guess is that there is too much federal grant money going into quantum computing and other fanciful experiments, and no one wants to rock the boat.

Update: Lubos Motl piles on.

If you had some basic common sense and honesty, your conclusion would be to switch to abandon the classical way of thinking and switch to the quantum way of thinking. You would stop talking about "spooky" and "weird" and "nonlocal" things that may be implied by a classical model but it contradicts the insights that modern physics has actually made about the essence of the laws of Nature.

But I guess that it's more convenient for this corrupt community to keep on playing with diamonds in totally stupid ways and write nonsensical hype about these games in the newspapers.

I won't proofread this text because it makes me too angry.

I agree with him about the corrupt community. His post still says "transfer any transformation" instead of "transfer any information", so I guess he still has not proofread it.

Wednesday, October 21, 2015

Against labeling privilege

Scott Aaronson has stumbled into a political dispute again. These are always fun to read. His views are those of a typical academic Jewish leftist, but he has an honest streak that causes him to choke on some of the more dogmatic leftist nonsense.

This time he refuses to go along with social science attacks on white male privilege, and he even declares that Marx and Freud were wrong. More importantly, he denounces those who idolize Marx and Freud. Previously, he approved of European countries keeping out immigrants in order to maintain their cultural and demographic identities.

This time he says Marx was wrong, and this:


In any case, I don’t see any escaping the fact that, for much of the 20th century, Marx and Freud were almost universally considered by humanist intellectuals to be two of the greatest geniuses who ever lived. To whatever extent that’s no longer true today, I’d see that as an opportunity for reflection about which present-day social dogmas might fare badly under the harsh gaze of future generations. Incidentally, from Newton till today, I can’t think of a single example of a similarly-catastrophic failure in the hard sciences, except when science was overruled politically (like with Lysenkoism, or the Nazis’ “Aryan physics”). The closest I can think of (and in terms of failure, it maybe rates a few milliMarxes) is Wegener and continental drift.

Even Lubos Motl agrees this time, altho he can't resist sniping at some other things Aaronson has said.

I try to avoid talking about the social sciences here, because if I did, then it would make the physicists look too good. I would rather point out where the hard sciences are going wrong, as there is some hope of fixing them.

On another political issue, Nature News reports:

Canadian election brings hope for science

Prime Minister Stephen Harper's Conservative party is ousted by the Liberals.

Canada’s middle-left Liberal party won a stunning victory on 19 October, unseating the ruling Conservative party after a tight three-way race. Researchers are hoping that the country’s new prime minister, Justin Trudeau, will help to restore government support for basic research and the country's tradition of environmentalism.

The Liberals have promised to amend some of the damage done to science and evidence-based policymaking in Canada under the Conservative government, which came into power in 2006. Under former Conservative prime minister Stephen Harper, research funding shifted to focus on applied science, many jobs and research centres were cut and the nation gained an international reputation for obstructing climate-change negotiations.

This sounds more like a left-wing editorial to me. It appears that all of our leading science journals are hopelessly infected with leftists.

Update: Also, more leftist censorship:

A well-known French weatherman has been put on an indefinite “forced holiday” after writing a book criticizing climate-change research and saying that, even if it does exist, it will probably have some very positive effects, including more tourists, lower death rates, lower electricity bills in the winter, and higher-quality wine.

Philippe Verdier, famous for delivering nightly forecasts on the state-run news channel France 2, has authored a new book casting doubt on the research of world climatologists.

Tuesday, October 20, 2015

Philosopher complains about scientists again

Philosopher of pseudoscience Massimo Pigliucci writes:


When George Ellis and Joe Silk wrote an op-ed in the prestigious Nature magazine, dramatically entitled “Defend the integrity of physics,” cosmologist Sean Carroll responded via Twitter (not exactly a prestigious scientific journal, but much more effective in public discourse) with, and I quote: “My real problem with the falsifiability police is: we don’t get to demand ahead of time what kind of theory correctly describes the world.” The “falsifiability police”? Wow.

This is actually all very amusing from the point of view of a philosopher of science. You see, our trade is often openly despised by physicists (the list of offenders is long: Steven Weinberg, Stephen Hawking, Lawrence Krauss, Neil deGrasse Tyson — see for instance this, or this), on the grounds that we are not useful to practicing scientists.

No, you don't get to be despised by being merely useless. Today's philosophers of science are despised because they are so openly anti-science, as I have documented on this blog many times.

If he thinks that his philosopher colleagues are useful to science, he should give an example. Instead he gives:

But also, there is interesting disagreement among philosophers themselves on this matter. For instance, Richard Dawid, a philosopher at the University of Vienna, has argued in favor of embracing a “post-empirical” science, where internal coherence and mathematical beauty become the major criteria for deciding whether a scientific theory is “true” or not. I, for one, object to such a view of science, both as a scientist and as a philosopher.

A better course of action would be to get both scientists and philosophers to seat at the high table and engage in constructive dialogue, each bringing their own perspective and expertise to the matter at hand. It would be helpful, however, is this happened with a bit less mud slinging and a bit more reciprocal intellectual respect.

The mud-slinging is almost entirely one-sided, with the philosophers arguing against the validity of modern science.

For the most part, today's physicists ignore philosophers as crackpots.

In the podcast, he argues that Newtonian mechanics is wrong, that there are no crucial experiments, that a science can become pseudoscience by not being progressive enuf, that philosophers need to define science in order to cut off funding for some projects, and that millions of Africans are dying because of pseudoscience.

When talking about the history of science, like others, he talks about Copernicus and Einstein. And of course the description is so over-simplified and distorted that his points are wrong.

His biggest gripe is with requiring scientific hypotheses to be falsifiable, as Karl Popper had argued. He hates the idea of science having a crisp definition, but wants philosophers to declare what is or is not science.

Saturday, October 17, 2015

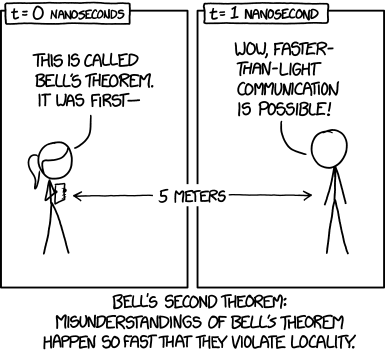

Comic about Bell misunderstandings

From an xkcd comic. The point here is that descriptions of Bell's theorem often include attempts to convince you of the nonlocality of nature. Sometimes this is explicit, and sometimes it is couched in language about spookiness.

I have often criticized those who say that Bell's theorem implies nonlocality, such as here last month.
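For readers who want the arithmetic behind the comic, here is a minimal Python sketch of the CHSH form of Bell's inequality. It assumes the textbook singlet-state correlation E(a, b) = -cos(a - b) and the standard choice of measurement angles; the function names are illustrative only. It shows what the theorem actually computes: predetermined ±1 answers can never give |S| above 2, while the quantum correlations reach 2√2. By itself the calculation says nothing about whether that gap should be read as nonlocality, which is exactly the interpretive step in dispute.

```python
import itertools
import math

def chsh(e):
    """CHSH combination S for a correlation function e(i, j), with settings i, j in {0, 1}."""
    return e(0, 0) + e(0, 1) + e(1, 0) - e(1, 1)

def local_deterministic_max():
    """Largest |S| over all predetermined +/-1 answers for two settings per side.

    Local hidden-variable models are mixtures of these deterministic
    strategies, so their |S| cannot exceed this maximum either.
    """
    best = 0
    for a0, a1, b0, b1 in itertools.product((1, -1), repeat=4):
        a, b = (a0, a1), (b0, b1)
        best = max(best, abs(chsh(lambda i, j: a[i] * b[j])))
    return best

def quantum_singlet_value():
    """|S| for the singlet-state prediction E = -cos(angle difference)."""
    alice = (0.0, math.pi / 2)
    bob = (math.pi / 4, -math.pi / 4)
    return abs(chsh(lambda i, j: -math.cos(alice[i] - bob[j])))

if __name__ == "__main__":
    print(local_deterministic_max())  # 2
    print(quantum_singlet_value())    # 2*sqrt(2), about 2.828
```

The local bound follows because S = a0(b0 + b1) + a1(b0 - b1), and one of the two brackets is always zero while the other is ±2.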

Thursday, October 15, 2015

Famous astronomer resigns

Big-shot UC Berkeley astronomer Geoffrey Marcy was just forced to resign. He was famous for finding a lot of alien planets, and has been mentioned as a Nobel Prize candidate, but the prize is rarely given to astronomers.


Lubos Motl has a rant about this.

I do not know the details, but this seems weak:

This summer, in response to a formal complaint by four former students, the University of California concluded that Dr. Marcy had violated its policies on sexual harassment. The violations, spanning 2001 to 2010, ...

Under Obama administration policy, this means only that there is a 51% or better chance that Marcy did something to cause offense.

His colleagues were ganging up on him, such as this letter complaining about NY Times coverage:

This article downplays Marcy's criminal behaviors and the profound damage that he has caused to countless individuals. It overlooks the continued trauma that Marcy inflicts to this day as a Berkeley professor, and it implicitly condones his predatory acts.

What? As far as I can see, there is no criminal complaint, and no complaint at all about behavior in the last 5 years. If someone does have a criminal complaint, then the appropriate place to complain is to the police, and then grand jury, district attorney, and court. Marcy would have a chance to defend himself like any other defendant.

No, this is just a witch hunt. Apparently some people don't like Marcy because he grabs too much credit for alien planets, or leftist feminists want to make a statement about bringing down a big shot. I don't know.

What were all those complainers doing in 2001-2010 when the behavior supposedly happened? Apparently they all thought it was just fine back then.

We have statutes of limitations for good reasons. It is no longer practical or reasonable to draw judgments about possibly rude behavior from ten years ago.

The NY Times draws this comparison:

Dr. Marcy’s troubles come at a time when sexism and sexual harassment are gaining prominence in science. Last December, the Massachusetts Institute of Technology cut ties with Walter Lewin, a popular lecturer, and deleted his physics course materials from its website after finding that he had sexually harassed at least one student.

No, Lewin was not accused of harassing any MIT students or anyone else within 1000 miles of him. The complaint was from an over-30 woman on the other side of the world who watched a couple of his YouTube videos.

Some people are also comparing to Tim Hunt, who lost his job over a false accusation about a public telling of a sexist joke.

Update: Lumo posts a 2nd rant.

The geometrization of physics

The greatest story of 20th century (XXc) physics was the geometrization of physics. Special relativity caught on once it was interpreted as a 4-dimensional non-Euclidean geometry. Then general relativity realized gravity as curved spacetime. In quantum mechanics, observations became projections in Hilbert space. Electromagnetism became a geometric gauge theory, and then so did the weak and strong interactions. By the 1980s, theorists were so sold on geometry that they jumped on the wildest string theories. The biggest argument for those theories was that the geometry is appealing.


Many people assume that Einstein was a leader in this movement, but he was not. When Minkowski popularized the spacetime geometry in 1908, Einstein rejected it. When Grossmann figured out how to geometrize gravity with the Ricci tensor, Einstein wrote papers in 1914 saying that covariance is impossible. When a relativity textbook described the geometrization of gravity, Einstein attacked it as wrongheaded.

I credit Poincare and Minkowski with the geometrization of relativity. In 1905, Poincare had the Lorentz group and the spacetime metric, and at the time a Klein geometry was understood in terms of a transformation group or an invariant metric. He also implicitly used the covariance of Maxwell's equations, thereby integrating the geometry with electromagnetism. Minkowski picked up where Poincare left off, explicitly treating world-lines, non-Euclidean geometry, and covariance. Einstein had none of that. He only had an exposition of Lorentz's theorem, not covariance or spacetime or geometry.
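The Klein-style point, that the Lorentz transformations form a group preserving the spacetime metric, can be checked numerically. This is a minimal Python sketch restricted to one space dimension, with units where c = 1 by default; the function names are hypothetical. It verifies that a boost leaves the interval (ct)^2 - x^2 unchanged, and that two successive boosts compose into a single boost, which is the group property.

```python
import math

def boost(t, x, v, c=1.0):
    """Lorentz boost along x with speed v (requires |v| < c)."""
    gamma = 1.0 / math.sqrt(1.0 - (v / c) ** 2)
    return gamma * (t - v * x / c**2), gamma * (x - v * t)

def interval(t, x, c=1.0):
    """Minkowski interval (ct)^2 - x^2, the invariant metric quantity."""
    return (c * t) ** 2 - x ** 2

def compose_velocities(v1, v2, c=1.0):
    """Speed of the single boost equivalent to boosting by v1 and then by v2."""
    return (v1 + v2) / (1.0 + v1 * v2 / c**2)

if __name__ == "__main__":
    t, x = 3.0, 1.0
    t1, x1 = boost(t, x, 0.6)
    print(interval(t, x), interval(t1, x1))  # both 8, up to rounding
    # Two boosts in a row agree with one boost at the composed speed.
    print(boost(*boost(t, x, 0.6), 0.5))
    print(boost(t, x, compose_velocities(0.6, 0.5)))
```

Closure under composition, together with invariance of the interval, is what lets the transformations be read as the symmetry group of a geometry rather than as a collection of separate effects.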

For a historian's detailed summary of how Poincare and Minkowski developed the geometric view of relativity, see "Minkowski, Mathematicians and the Mathematical Theory of Relativity" and "The Non-Euclidean Style of Minkowskian Relativity" by Scott Walter.

In 1900, physics textbooks were not even using vector notation. That is how far we have come. Today vectors are indispensable, but they are also more subtle than the average student realizes. Most physics books don't explain the geometry adequately.

Carlo Rovelli writes:

WHAT SCIENTIFIC IDEA IS READY FOR RETIREMENT?

Geometry

We will continue to use geometry as a useful branch of mathematics, but is time to abandon the longstanding idea of geometry as the description of physical space. The idea that geometry is the description of physical space is engrained in us, and might sound hard to get rid of it, but it is unavoidable; it is just a matter of time. Better get rid of it soon.

Geometry developed at first as a description of the properties of parcels of agricultural land. In the hands of ancient Greeks it became a powerful tool for dealing with abstract triangles, lines, circles, and similar, and was applied to describe paths of light and movements of celestial bodies with very great efficacy. ...

In reality, they are quantum entities that are discrete and fluctuating. Therefore the physical space in which we are immersed is in reality a quantum dynamical entity, which shares very little with what we call "geometry". It is a pullulating process of finite interacting quanta.

This essay is included in a new book, This Idea Must Die: Scientific Theories That Are Blocking Progress (Edge Question Series), which has a curious collection of opinions. It was promoted on a Science Friday broadcast.

I don't know what he has against geometry. Geometry is central to most of XX century physics, and no good alternative has been found.

There is no evidence that physical space is discrete or fluctuating. We do not even have proof that electrons are discrete. Sure, some observables have discrete spectrum. But our theories for nature itself are continuous, not discrete, and make heavy use of geometry.

I have previously commented on Rovelli's defense of Aristotle, Einstein, and free will.

Monday, October 12, 2015

What's wrong with free will compatibilism

Klaas Landsman writes a new philosophy paper:


The (Strong) Free Will Theorem of Conway & Kochen (2009) on the one hand follows from uncontroversial parts of modern physics and elementary mathematical and logical reasoning, but on the other hand seems predicated on an undefined notion of free will (allowing physicists to ‘freely choose’ the settings of their experiments). Although Conway and Kochen informally claim that their theorem supports indeterminism and, in its wake, a libertarian agenda for free will, ...

Therefore, although the intention of Conway and Kochen was to support free will through their theorem, what they actually achieved is the opposite: a well-known, philosophically viable version of free will now turns out to be incompatible with physics!

For centuries, philosophers have argued that scientific materialism requires determinism. And common sense requires free will. So they dreamed up the concept of compatibilism to say that free will is compatible with determinism.

Apparently nearly all philosophers believe in determinism, and either reject free will or grudgingly accept a compatibilist view of free will.

All of this seems terribly out of date, as most physicists have rejected determinism for about a century. They say that quantum mechanics proves indeterminism, and the Bell test experiments are the most convincing demonstration.

In my opinion, they do not quite prove indeterminism, but they certainly prove that indeterminism is a viable belief, and that philosophers are wrong to assume that scientific materialism requires determinism.

Landsman argues that quantum mechanics is contrary to compatibilism. The Conway-Kochen argument is that there is some sort of super-determinism (that almost no one believes), or electrons are indeterministic (ie, exhibit random behavior) and humans have the free will to choose experimental design (ie, also exhibiting behavior that seems random to others).

To their defence, it is fair to say that Conway and Kochen never intended to support compatibilist free will in the first place. It is hard to find more scathing comments on compatibilism than the following ones by Conway:

Compatibilism in my view is silly. Sorry, I shouldn’t just say straight off that it is silly. Compatibilism is an old viewpoint from previous centuries when philosophers were talking about free will. They were accustomed to physical theory being deterministic. And then there’s the question: How can we have free will in this deterministic universe? Well, they sat and thought for ages and ages and ages and read books on philosophy and God knows what and they came up with compatibilism, which was a tremendous wrenching effect to reconcile 2 things which seemed incompatible. And they said they were compatible after all. But nobody would ever have come up with compatibilism if they thought, as turns out to be the case, that science wasn’t deterministic. The whole business of compatibilism was to reconcile what science told you at the time, centuries ago down to 1 century ago: Science appeared to be totally deterministic, and how can we reconcile that with free will, which is not deterministic? So compatibilism, I see it as out of date, really. It’s doing something that doesn’t need to be done. However, compatibilism hasn’t gone out of date, certainly, as far as the philosophers are concerned. Lots of them are still very keen on it. How can I say it? If you do anything that seems impossible, you’re quite proud when you appear to have succeeded. And so really the philosophers don’t want to give up this notion of compatibilism because it seems so damned clever. But my view is it’s really nonsense. And it’s not necessary. So whether it actually is nonsense or not doesn’t matter.

I do not expect any of this to have any influence on philosophers. They refuse to accept true (libertarian) free will.

Monday, October 5, 2015

Striving for a Faithful Image of Nature

I wrote an FQXi essay doubting that nature can be faithfully represented by mathematics.


H. C. Ottinger is writing a QFT textbook dedicated to proving that it is possible:

Quantum Field Theory as a Faithful Image of Nature ...

This book is particularly influenced by the epistemological ideas of Ludwig Boltzmann: “... it cannot be our task to find an absolutely correct theory but rather a picture that is as simple as possible and that represents phenomena as accurately as possible” (see p. 91 of [8]). This book is an attempt to construct an intuitive and elegant image of the real world of fundamental particles and their interactions. To clarify the words picture and image, the goal could be rephrased as the construction of a genuine mathematical representation of the real world. ...

Let us summarize what we have achieved, which mathematical problems remain to be solved, and where we should go in the future to achieve a faithful image of nature for fundamental particles and all their interactions that fully reflects the state-of-the-art knowledge of quantum field theory. Sections 3.2 and 3.3 may be considered as a program for future work.

QFT is our best candidate as a mathematical theory of everything, but this text confuses me. The title indicates that QFT is a faithful image of nature. But then he endorses Boltzmann's philosophy that rejects such an absolutely correct theory, and strives for theories that are as simple and accurate as possible. Then later he indicates that maybe we will have a faithful theory in the future.

So is QFT a mathematically faithful image of nature and an absolutely correct theory or not?

I guess not.

These theoretical physicists all seem to assume that a mathematically perfect theory of nature is going to be found. The book "Dreams of a Final Theory" by S. Weinberg promotes that idea. The LHC was supposed to provide the experimental evidence.

Boltzmann’s philosophical lectures attracted huge audiences (some 600 students) and so much public attention that the Emperor Franz Joseph I (reigning Austria from 1848 to 1916) invited him for a reception at the Palace to express his delight about Boltzmann’s return to Vienna. So, Boltzmann was not only a theoretical physicist of the first generation, but also an officially recognized part-time philosopher. ...

He says that the Duhem-Quine thesis, which was supposedly one of the great ideas of XX century philosophy of science, was just a rehash of what Boltzmann said.

Besides we must admit that the purpose of all science and thus of physics too, would be attained most perfectly if one had found formulae by means of which the phenomena to be expected could be unambiguously, reliably and completely calculated beforehand in every special instance; however this is just as much an unrealisable ideal as the knowledge of the law of action and the initial states of all atoms.

Phenomenology believed that it could represent nature without in any way going beyond experience, but I think this is an illusion. No equation represents any processes with absolute accuracy, but always idealizes them, emphasizing common features and neglecting what is different and thus going beyond experience.

Here are the book's postulates:

First Metaphysical Postulate: A mathematical image of nature must be rigorously consistent; mathematical elegance is an integral part of an appealing image of nature.

Second Metaphysical Postulate: Physical phenomena can be represented by theories in space and time; they do not require theories of space and time, so that space and time possess the status of prerequisites for physical theories.

Third Metaphysical Postulate: All infinities are to be treated as potential infinities; the corresponding limitlessness is to be represented by mathematical limiting procedures; all numerous infinities are to be restricted to countable.

Fourth Metaphysical Postulate: In quantum field theory, irreversible contributions to the fundamental evolution equations arise naturally and unavoidably.

There are sharp differences among physicists over whether their mission is to find better and better mathematical approximations, or to find the mathematically perfect theory valid to all scales. Lubos Motl rips Larry Krauss on this issue:

But let me get to the main question, namely whether a true theory that works at all scale does exist or can exist.

Krauss writes: We know of no theory that both makes contact with the empirical world, and is absolutely and always true.

[Motl] Well, you don't because you're just a bunch of loud and obnoxious physics bashers. But what's more important is that the state-of-the-art theoretical physicists do know a theory that both makes a contact with the empirical world and is – almost certainly – absolutely and always true. It's called string theory.

[Krauss] This theory, often called superstring theory, produced a great deal of excitement among theorists in the 1980s and 1990s, but to date there is not any evidence that it actually describes the universe we live in.

[Motl] This is absolutely ludicrous. The amount of evidence that string theory is right is strictly greater than the amount of evidence that quantum field theory is right. Because string theory may be shown to reduce to quantum field theory with all the desired components and features at the accessible scales, it follows that they're indistinguishable from the empirical viewpoint. That's why Krauss' statement is exactly as ludicrous as the statement that there exists no evidence backing quantum field theory.

Needless to say, his statement is even more ludicrous than that because the evidence backing string theory is actually more extensive than the evidence supporting quantum field theory. Unlike renormalizable quantum field theory that bans gravity, string theory predicts it. And one may also mention all the Richard-Dawid-style "non-empirical" evidence that string theory is correct.

OK, near the end, where he already admits that there exists a theory that makes his initial statements about non-existence wrong if the researchers in the subject are right, we read:

[Krauss] While we don’t know the answers to that question [whether there is a theory that is valid without limitations of scales], we should, at the very least, be skeptical. There is no example so far where an extrapolation as grand as that associated with string theory, not grounded by direct experimental or observational results, has provided a successful model of nature. In addition, the more we learn about string theory, the more complicated it appears to be, and many early expectations about its universalism may have been optimistic.

The claim that "there has been no theory not grounded by direct experimental or observational results that has provided a successful model of Nature" is clearly wrong. Some ancient philosophers – and relatively modern chemists – have correctly guessed that the matter is composed of atoms even though there seemed to be no chance to see an individual atom or determine its size, at least approximately.

But it was correctly "guessed", anyway.

Motl defines string theory to include all the successful developments of QFT, while Krauss is sticking to the string theory vision that all that will someday be derivable from some 10 or 11 dimensional geometry.

Yes, Democritus and others correctly guessed that matter was made of atoms. But they did have some evidence. They observed that water could be frozen, boiled, or polluted, and then returned to being water, without any lasting damage. Likewise with other elements. Water is not technically an element, but they would have been even more impressed if they decomposed it into gases, and then combined them to make water again. Being made of atoms was the most plausible explanation of this phenomenon.

Motl continues:

Also, both special and general theory of relativity were constructed without any direct experimental or observational results. The Morley-Michelson experiment could have "slightly" invalidated the previous statement except that Einstein has always claimed that he wasn't aware of that experiment at all and it played no role in his derivations. Similarly, general relativity was constructed by purely theoretical methods. The perihelion precession of Mercury was nice but it was just a "by the way" observation that Einstein noticed. This anomaly was in no way the "soil" in which the research of Einstein that produced GR was "grounded".

No, this is not correct. Maybe Einstein ignored Michelson-Morley, but it was crucial for the FitzGerald length contraction, Larmor time dilation, relativistic mass, Lorentz transformation, and four dimensional spacetime geometry, as the papers announcing the discovery of these concepts all cited the experiment as being crucial. Even Einstein wrote in 1909 that the experiment was crucial to special relativity, if not to his own work, which was mainly to assume what Lorentz and Poincare had proved.
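Why the Michelson-Morley null result pointed straight at length contraction can be seen in a few lines of arithmetic. Below is a minimal Python sketch of the classical ether calculation, assuming both arms have the same rest length L and the apparatus moves through the ether at speed v along one arm. The function names and the numbers (an 11 m effective arm, 30 km/s orbital speed, 550 nm light) are illustrative assumptions, not figures taken from the original papers. Without contraction the parallel arm takes longer, and rotating the apparatus should shift the fringes by a few tenths of a fringe; shrinking the parallel arm by the factor sqrt(1 - v^2/c^2) makes the two round-trip times exactly equal, which is a null result.

```python
import math

C = 299_792_458.0  # speed of light, m/s

def round_trip_times(L, v, c=C, contract=False):
    """Ether-theory round-trip light times (parallel arm, perpendicular arm).

    If contract is True, the arm parallel to the motion is shortened by the
    FitzGerald-Lorentz factor sqrt(1 - v^2/c^2); the perpendicular arm is not.
    """
    L_par = L * math.sqrt(1.0 - (v / c) ** 2) if contract else L
    t_par = L_par / (c - v) + L_par / (c + v)   # upstream leg, then downstream leg
    t_perp = 2.0 * L / math.sqrt(c**2 - v**2)   # diagonal path out and back
    return t_par, t_perp

def expected_fringe_shift(L, v, wavelength, c=C):
    """Fringe shift on rotating the apparatus by 90 degrees, with no contraction."""
    t_par, t_perp = round_trip_times(L, v, c)
    # Rotation swaps the arms, so the optical path difference changes by
    # twice c * (t_par - t_perp).
    return 2.0 * c * (t_par - t_perp) / wavelength

if __name__ == "__main__":
    L, v, lam = 11.0, 3.0e4, 550e-9  # illustrative values (assumed)
    print(expected_fringe_shift(L, v, lam))  # roughly 0.4 of a fringe
    t_par, t_perp = round_trip_times(L, v, contract=True)
    print((t_par - t_perp) / t_perp)         # zero up to floating-point rounding
```

To leading order the uncontracted time difference is L v^2 / c^3, so the predicted shift is about 2 L v^2 / (λ c^2); the contraction factor is precisely what removes it.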

And we now know from unpublished papers that explaining the Mercury perihelion precession was one of the biggest motivators for general relativity, if not the biggest.
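For scale, the anomaly in question is small. This minimal Python sketch evaluates the standard leading-order general-relativity formula for the perihelion advance per orbit, 6πGM/(c^2 a (1 - e^2)), using rounded textbook values for the Sun and Mercury (the constants and function names are assumptions for illustration). It reproduces the well-known figure of about 43 arcseconds per century that the theory had to explain.

```python
import math

GM_SUN = 1.32712440018e20  # gravitational parameter of the Sun, m^3/s^2
C = 299_792_458.0          # speed of light, m/s
A_MERCURY = 5.7909e10      # semi-major axis of Mercury's orbit, m (approx.)
E_MERCURY = 0.2056         # orbital eccentricity (approx.)
PERIOD_DAYS = 87.969       # sidereal orbital period, days (approx.)

def gr_advance_per_orbit(gm, a, e, c=C):
    """Leading-order GR perihelion advance per orbit, in radians."""
    return 6.0 * math.pi * gm / (c**2 * a * (1.0 - e**2))

def arcsec_per_century(rad_per_orbit, period_days):
    """Convert an advance per orbit into arcseconds per Julian century."""
    orbits_per_century = 36525.0 / period_days
    rad_per_century = rad_per_orbit * orbits_per_century
    return math.degrees(rad_per_century) * 3600.0

if __name__ == "__main__":
    per_orbit = gr_advance_per_orbit(GM_SUN, A_MERCURY, E_MERCURY)
    print(arcsec_per_century(per_orbit, PERIOD_DAYS))  # about 43
```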

Max Tegmark comes squarely on the side of mathematics perfectly describing the universe, and not just being approximations, and he explains in this recently-reprinted 2014 essay:

For the bird—and the physicist—there is no objective definition of past or future. As Einstein put it, “The distinction between past, present, and future is only a stubbornly persistent illusion.” ...

The idea of spacetime does more than teach us to rethink the meaning of past and future. It also introduces us to the idea of a mathematical universe. Spacetime is a purely mathematical structure in the sense that it has no properties at all except mathematical properties, for example the number four, its number of dimensions. In my book Our Mathematical Universe, I argue that not only spacetime, but indeed our entire external physical reality, is a mathematical structure, which is by definition an abstract, immutable entity existing outside of space and time.

What does this actually mean? It means, for one thing, a universe that can be beautifully described by mathematics. ...

That our universe is approximately described by mathematics means that some but not all of its properties are mathematical. That it is mathematical means that all of its properties are mathematical; that it has no properties at all except mathematical ones.

You might expect Motl to agree with this, but he attacks it for the incoherent nonsense that it is.

Note that Tegmark says that time is reversible, while the above QFT textbook says that QFT features the irreversibility of time.

Update: Lawrence M. Krauss writes in the New Yorker, where he seems to be the resident physicist:

The physicist Richard Feynman once suggested that nature is like an infinite onion. With each new experiment, we peel another layer of reality; because the onion is infinite, new layers will continue to be discovered forever. Another possibility is that we’ll get to the core. Perhaps physics will end someday, with the discovery of a “theory of everything” that describes nature on all scales, no matter how large or small. We don’t know which future we will live in.

As usual, Feynman was a voice of sanity. Today's physicists are conceited enough to think that they can find the core, in spite of all the contrary evidence.

Thursday, October 1, 2015

Defending logical positivism

Logical positivism is a philosophy of science that was popular in the 1930s, and largely abandoned in the following decades. It is a variant of positivism, a view that is peculiar because it is widely accepted among scientists today, but widely rejected among philosophers of science. Wikipedia says logical positivism is "dead, or as dead as a philosophical movement ever becomes". [1,2]

I defend logical positivism, based on my understanding of it, as the best way to understand math and science today.

The core of logical positivism is to classify statements based on how we can determine their truth or falsity. There are three types.

1. Math. This includes mathematical theorems, logic, tautologies, and statements that are true or false by definition.

2. Science. This includes observations, measurements, induction, approximations, hypothesis testing, and other empirical work.

3. All else - mysticism, ethics, subjective beauty, opinion, religion, metaphysics, aesthetics, etc.

Mathematical truth is the highest kind of truth, and is given by logical proofs. It goes back to Euclid and the ancient Greeks, and was not independently discovered by anyone else, as far as I know. When a theorem is proved, you can be 100% sure of it.

You might think that mathematical knowledge is empirical, but it has not been for two millennia. You might also have been told Goedel's work somehow undermined the axiomatic method. The truth is more nearly the opposite. Math means logical proof. If I say that the square root of two is irrational, that means that I have a proof.
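As a concrete instance of what "I have a proof" means here, this is the classical argument for the irrationality of the square root of two, known since antiquity. The post does not spell it out; it is included only to show that the conclusion rests on logic alone, with no measurement anywhere in the chain:

```latex
\begin{align*}
&\text{Suppose } \sqrt{2} = p/q \text{ with } p, q \in \mathbb{Z}_{>0} \text{ and } \gcd(p, q) = 1. \\
&\Rightarrow\ p^2 = 2q^2 \ \Rightarrow\ p^2 \text{ is even} \ \Rightarrow\ p \text{ is even, say } p = 2r. \\
&\Rightarrow\ 4r^2 = 2q^2 \ \Rightarrow\ q^2 = 2r^2 \ \Rightarrow\ q \text{ is even}. \\
&\Rightarrow\ 2 \mid \gcd(p, q), \text{ contradicting } \gcd(p, q) = 1. \text{ Hence } \sqrt{2} \notin \mathbb{Q}.
\end{align*}
```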

Science arrives at somewhat less certain truths using empirical methods. A conclusion might be that the Sun will rise in the East tomorrow morning. This is backed up by many observations, as well as theory that has been tested in many independent ways. Science also makes predictions with lesser confidence. These are considered truths, even though they are not as certain as mathematical truths.

There are many other statements that are not amenable to mathematical proof or empirical verification. For example, I may be of the opinion that sunsets are beautiful. But I do not have any way of demonstrating it, either mathematically or empirically. If you told me that you disagreed, I would be indifferent, because there is no true/false meaning attached to the statement. It is meaningless, in that sense.

So far, this is just a definition, and not in serious dispute. What makes logical positivists controversial is their strong emphasis on truth. They tend to be skeptical about aspects of scientific theories that have not been tested and verified, and they may even be contemptuous of metaphysical beliefs. Others don't like it when their opinions are called meaningless.

Logical positivism is part of the broader philosophy of positivism that emphasizes empirically verifying truths. Logical positivism is a kind of positivism that recognizes math as non-empirical truth. When speaking of science, positivism and logical positivism are essentially the same. [3]

Logical positivists do distinguish between logical and empirical truths. I. Kant previously drew a distinction between analytic and synthetic statements, with analytic being like logical, and synthetic being like empirical. However, he classified 7+5=12 as synthetic (specifically, synthetic a priori), which makes no sense to me. So his views have little to do with logical positivism.
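One way to see why calling 7+5=12 synthetic seems odd: in a modern axiomatization of arithmetic, 7+5=12 follows from definitions by rewriting alone. The sketch below uses Peano-style arithmetic, which postdates Kant and is offered as an illustration, not as Kant's own framework. Here S is the successor function, numerals abbreviate repeated successors of 0 (so 12 is S applied five times to 7), and addition is defined by a + 0 = a and a + S(b) = S(a + b):

```latex
\begin{align*}
7 + 5 &= 7 + S(4) = S(7 + 4) = S(S(7 + 3)) = \cdots = S^5(7 + 0) \\
      &= S^5(7) = S(S(S(S(S(7))))) = 12.
\end{align*}
```

No step appeals to experience; each one applies one of the two defining equations.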

Some statements might be a mixture of the three types, and not so easily classified. To fully understand a statement, you have to define its terms and context, and agree on what it means for the statement to be true. For the most part, mathematical truths are the statements found in math textbooks and journals, and scientific truths are the ones found in science textbooks and journals.

Enabled by XX century progress

I might not have been a positivist before the XX century, when broad progress in many fields provided a much sharper view of the known world.

In math, the major foundational issues got settled, with the work of Goedel, Bourbaki, and many others. Nearly all mathematics, if not all, has proofs that could be formalized in some axiom system like ZFC. [4]

This opinion may surprise you, as there is a common philosophical view that the pioneering work on logicism by G. Frege, B. Russell, and others was disproved by Goedel. One encyclopedia says: "On the whole the attempt to reduce mathematics to logic was not successful." [5] Scientific American published a 1993 article on "The Death of Proof". Not many mathematicians would agree.

The math journals publish theorems with proofs. The proofs are not written in a formal language, but they build on an axiomatic foundation and could be formalized. No Turing machine can determine the truth or falsity of every mathematical statement, so math is not reducible to logic in that sense. But every math theorem is also a logical theorem of axiomatic set theory, so in that sense, math has been reduced to logic.

In physics, advances like relativity and quantum mechanics expanded the scope of science from the sub-atomic to the galaxy cluster. Also, these theories became fully causal, so that physics could hope to give a complete explanation of the workings of nature. The fundamental forces have quantitative theories that are as accurate as we can measure.

Again, this opinion may surprise you, as philosophers commonly deny that fundamental physics has anything to say about causality. [6] If that were true, it would rob physics of nearly all of its explanatory and predictive power. In my opinion, incorporating causality into fundamental physics was the most important intellectual achievement in modern times. Before 1850, we did not have causal explanations for any of the four fundamental forces. Now we have fully causal theories for all four, and those theories have led to the most striking technological progress in the history of the planet.

In biology, the discovery and deciphering of DNA and the genetic code meant that life could be included in the grand reductionist scientific vision.

Medicine, statistics, economics, and other fields made so much progress that XX century knowledge is the only thing to study.

With all the advances of the last century, there is hardly any need to believe in unproven ideas, the way ancient people might have attributed storms to angry gods. Today one can stick with positive knowledge, and have a coherent and satisfactory view of how the world works.

Philosophical criticisms

Philosophers have turned against positivism, and especially logical positivism. They consider the subject dead. The most common criticism is to attack metaphysical beliefs that logical positivists supposedly have. For example, some say that logical positivists cannot prove the merits of their views.

This is a bit like criticizing atheists for being unable to prove that there is no god.

Logical positivists do not accept metaphysics as truth. No argument about metaphysics can possibly refute logical positivism.

Some say that Goedel disproved the logical part of logical positivism. That is not true, as explained above. Goedel gave a clearer view of the axiomatic method, but did not undermine it.

The argument that logical positivism is self-defeating is fallacious in the same way as the argument that Goedel's logic is self-defeating. It misses the point about what the subject matter is. It is like saying: The imaginary numbers prove that the real numbers are incomplete, and therefore real analysis is invalid. No, the imaginary numbers merely shed light on what non-real numbers might look like.

Another line of criticism comes from what I call negativism. In this view, there is no such thing as positive truth. There are only falsified theories, and theories that have not yet been falsified. In effect, they say you can only prove a negative, not a positive. [7]

This seems backwards to me. If Newtonian theory is good enough to send a man to the Moon, then obviously there is something right about it. It is a true theory, and valid where it applies. It is not wrong just because it is not perfectly suited to all tasks. Pick up any science journal, and you will find it filled with positive knowledge.

Some science historians claim that the progress of science was fueled by anti-positivists who were willing to stake out beliefs that went beyond what could be empirically demonstrated. For example, some positivists were skeptical about the existence of atoms, long after others were convinced. A later example is the 1964 discovery of quarks, which are never seen in isolation. Murray Gell-Mann got the 1969 Nobel Prize for it, but the official citation did not mention quarks, and the introduction to his Nobel lecture only mentioned the great heuristic value of quarks. His lecture said that existence was immaterial, and that "quark is just a notion so far. It is a useful notion, but actual quarks may not exist at all." [8] His original paper only called it a "schematic model". Today quarks are commonly considered elementary particles.

Gell-Mann and the Nobel committee were being positivist, because without direct evidence for quarks, it is not necessary to believe that a quark is any more than a useful heuristic notion. Anti-positivists would say that, in retrospect, Gell-Mann was being unduly cautious.

It is not necessary to believe that quarks are real particles, just as there is barely any benefit to thinking of light as photons. Planck's original 1900 idea was that light was absorbed and emitted as discrete quanta, and that idea is still good today. You have to face some tricky paradoxes if you think of light as always being particles.

A more recent example of positivism is the 2011 Nobel Prize for the discovery of the dark energy that permeates the universe. The recipients and most other astrophysicists refuse to say what it is, except that the phrase is a shorthand for the supernovae evidence for the accelerating expansion of the universe. When asked to speculate, one of them says "Reality is the set of ideas that predicts what you see." [9] Again, a positivist view prevails.

It is hard to find examples where positivist views have held back scientific progress, or where anti-positivist views have enabled progress. Weinberg claims to have a couple of examples, [10] such as J.J. Thomson getting credit (and the 1906 Nobel Prize) for the discovery of the electron, even though a positivist did better work. Supposedly Thomson's non-positivism allowed him to see the electron as a true particle, and investigate its particle-like properties. Of course, we now know that the electron is not truly a particle, and his son later got the Nobel Prize for showing that it was a wave. Or a wave-like particle, or a particle-like wave, or a quantized electric field, or a renormalized bare charge, or whatever you want to call it. The positivist would say that the electron is defined by its empirical properties and our well-accepted quantum mechanical theories, and words like particle and wave are just verbal shortcuts for describing what we know about electrons.

Weinberg's larger point is that modern philosophy has become irrelevant and possibly detrimental to science. I would trace the problem to the rejection of logical positivism. That is when philosophers decided that they were no longer interested in truth, as understood by mathematicians and scientists.

Criticism of positivism also comes from the followers of T.S. Kuhn's paradigm shift theory. He denied that science was making progress towards truth, and portrayed the great scientific revolutions as irrational leaps to a theory with no measurable advantages. His favorite example was the Copernican revolution, in which the 1543 belief in the Earth's revolution around the Sun had no empirical advantages. [11]

Kuhn was influenced by the anti-positivist Michael Polanyi, who also drew anti-positivist lessons from Copernicus, and who claimed that relativity was discovered from pure speculation, intuition, and gedanken (thought) experiment, rather than from empiricism or real experiment. [12]

Polanyi's argument is as absurd as it sounds. The early papers on relativity had many startling consequences, including length contraction, time distortion, and mass increase, and every paper on these developments described them as consequences of the Michelson-Morley experiment. So do most of the textbooks, both now and a century ago. That was the crucial experiment. The sole support for Polanyi was Einstein's reluctance to credit others, but even some of Einstein's papers described the experiment as being crucial to relativity. Also, the prediction of mass increase with velocity was tested before Einstein wrote his first paper on the subject.
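For reference, the three effects named above have these standard textbook forms. Here v is the relative speed, c the speed of light, a subscript 0 marks a quantity measured in the object's rest frame, and m is the "relativistic mass" used in the early literature:

```latex
\gamma = \frac{1}{\sqrt{1 - v^2/c^2}}, \qquad
L = \frac{L_0}{\gamma}, \qquad
\Delta t = \gamma \, \Delta t_0, \qquad
m = \gamma \, m_0
```

Each factor approaches 1 as v/c approaches 0, which is why none of these effects shows up in everyday experience.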

A positivist is likely to agree that the Ptolemaic and Copernican systems had little empirical difference. Indeed, general relativity teaches that geocentric and heliocentric coordinates are equally valid, as the physical equations can be written in any choice of coordinates.
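To make the coordinate point concrete, here is a toy sketch under loudly simplified assumptions: circular, coplanar orbits, rounded radii of 1.0 and 1.524 AU, periods of 1.0 and 1.881 years, and Earth and Mars lined up at t = 0. The function names are hypothetical, chosen for illustration. It computes the direction from Earth to Mars once with the Sun held fixed, and once with the Earth held fixed (a Tycho-style arrangement in which the Sun circles the Earth and Mars circles the Sun). This is ordinary kinematics only; it does not exercise general relativity's covariance, which is a much stronger statement.

```python
import math

# Toy model, illustrative assumptions only: circular, coplanar orbits,
# radii in AU, periods in years, Earth and Mars lined up at t = 0.
EARTH = (1.0, 1.0)      # (orbital radius, orbital period)
MARS = (1.524, 1.881)


def orbit(planet, t):
    """Position of a planet on a circular orbit about the Sun at time t (years)."""
    radius, period = planet
    angle = 2 * math.pi * t / period
    return radius * math.cos(angle), radius * math.sin(angle)


def mars_seen_heliocentric(t):
    """Sun held fixed: the Earth-to-Mars vector is Mars minus Earth."""
    ex, ey = orbit(EARTH, t)
    mx, my = orbit(MARS, t)
    return mx - ex, my - ey


def mars_seen_geocentric(t):
    """Earth held fixed: the Sun circles the Earth, and Mars circles the Sun."""
    ex, ey = orbit(EARTH, t)
    sun_x, sun_y = -ex, -ey          # the Sun as seen from a stationary Earth
    mx, my = orbit(MARS, t)          # Mars relative to the Sun
    return sun_x + mx, sun_y + my


def apparent_longitude(vec):
    """Direction of Mars on the sky (radians), the quantity actually observed."""
    return math.atan2(vec[1], vec[0])


if __name__ == "__main__":
    for t in (0.0, 0.01, 0.5, 1.3):
        helio = apparent_longitude(mars_seen_heliocentric(t))
        geo = apparent_longitude(mars_seen_geocentric(t))
        print(f"t={t:5.2f} yr  heliocentric={helio:+.6f}  geocentric={geo:+.6f}")
```

The two columns agree at every time step, because the two descriptions differ only in which body is placed at the origin; the observable direction of Mars cannot tell them apart.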

Kuhn's paradigm shift theory really only applies to ancient debates over unobservables. XX century advances like relativity and quantum mechanics do not match his description at all, as they offered measurable advantages that a rational objective observer could appreciate.